The primary focus is on instruction-tuned LLMs, an emerging paradigm that has drawn substantial attention in both research and practical applications because these models can follow instructions.

Instruction-tuned LLMs start from a base model trained to predict the next word. They are then fine-tuned on examples of instructions paired with good responses and further refined with reinforcement learning from human feedback (RLHF). The resulting models are easier to use and more likely to produce helpful, safe, and aligned output, which makes them the preferred choice for most applications today.

A critical aspect of working with instruction-tuned LLMs is prompting. A good prompt gives clear and specific instructions, much as you would brief a smart person who doesn’t know the specifics of your task: say what the text should focus on and what tone it should take. This reflects the two key principles of effective prompting: write clear and specific instructions, and give the model time to think.

LLMs also have limitations. For example, when asked about obscure topics, they can generate output that sounds plausible but is inaccurate. Ongoing work aims to reduce these weaknesses so that applications built on LLMs are more reliable.

Getting started with LLMs in your own code involves two steps:

Setting up the OpenAI Python library: To use OpenAI’s GPT models (a family of large language models) from Python, install the OpenAI Python library (for example, with `pip install openai`). It provides the API bindings for sending prompts to the models and receiving their responses.

Configuring the OpenAI API key: Requests to OpenAI’s models require an API key. The key associates each request with your account so that OpenAI can track usage for billing and rate limiting. Store the key in an environment variable (the library reads OPENAI_API_KEY by default) so the library can use it without the key being hard-coded in your source.
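Here is a minimal sketch of both steps. It assumes version 1.x of the `openai` package (installed with `pip install openai`) and a key stored in the `OPENAI_API_KEY` environment variable. The helper names `load_api_key` and `make_client` are hypothetical. Only the `OpenAI` client class comes from the library.

```python
import os


# Hypothetical helpers for illustration; only `OpenAI` comes from the openai package.
def load_api_key(env=None):
    """Return the API key from the environment, failing early if it is missing."""
    env = os.environ if env is None else env
    key = env.get("OPENAI_API_KEY", "").strip()
    if not key:
        raise RuntimeError("Set the OPENAI_API_KEY environment variable first.")
    return key


def make_client():
    """Create an OpenAI client (assumes openai>=1.0 is installed)."""
    from openai import OpenAI  # lazy import so this module loads without the package
    return OpenAI(api_key=load_api_key())
```

Keeping the key in the environment keeps it out of source control. A clear error at startup is also easier to diagnose than an authentication failure on the first request.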

Using LLMs effectively also requires knowing how to format prompts (for example, with delimiters or requests for structured output) and how to steer the model’s behavior (for example, with few-shot prompting). OpenAI and the broader AI research community publish guidelines and best practices. Still, there is plenty of room for experimentation based on the specifics of each task or application.
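The sketch below illustrates delimiters and few-shot prompting with plain Python data. It assumes the chat message format used by OpenAI’s chat completions endpoint: a list of dictionaries with `role` and `content` keys. The function names and the XML-style `<text>` delimiter are illustrative choices. The delimiter marks which part of the prompt is input rather than instruction, which can also make injected instructions in that input less likely to be followed. The few-shot examples are passed as earlier user and assistant turns so the model can imitate their style.

```python
# Hypothetical helpers for illustration; not part of the openai package.
def delimited_prompt(instruction, text, tag="text"):
    """Place the input inside XML-style tags, separate from the instruction."""
    return f"{instruction}\nThe input is enclosed in <{tag}> tags.\n<{tag}>\n{text}\n</{tag}>"


def few_shot_messages(examples, query, system="Answer in a consistent style."):
    """Build a chat message list in which earlier turns demonstrate the desired output."""
    messages = [{"role": "system", "content": system}]
    for user_text, assistant_text in examples:
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": assistant_text})
    messages.append({"role": "user", "content": query})
    return messages
```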

Iterative Prompt Development

Iterative Prompt Development is central to building applications with Large Language Models (LLMs). The first prompt does not need to be perfect. The core idea is to refine it progressively over successive iterations until it works well for the desired task, much as machine learning models are developed iteratively.

The process begins with an idea of what the application should accomplish. You then write a first prompt that is clear and specific and, where needed, gives the model time to think. After running it, you analyze the output, identify any shortfalls, refine the idea, and update the prompt. You repeat this loop until the prompt adequately serves the application’s needs. The key is not finding a perfect prompt on the first attempt but establishing an effective process for developing task-specific prompts.

To illustrate, consider a summarization-style task in which the prompt asks the model to write a description based on a given text. After evaluating the output, you might refine the prompt to limit the output’s length, focus on specific details, or specify which characteristics to emphasize. Each round of changes brings the prompt closer to what the application needs.

For more mature applications, it is also important to test prompts against a larger set of examples and to compare prompt variations. This helps the application behave robustly across a wide range of inputs.
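A small harness makes this kind of testing repeatable. In this sketch, `complete` stands for any function that sends a prompt to the model and returns its reply. `check` encodes what a good output looks like for your application. Both, like `evaluate_prompt` itself, are hypothetical names, and the template is assumed to contain a `{text}` placeholder.

```python
from typing import Callable, Iterable


# Hypothetical evaluation harness; `complete` and `check` are functions you supply.
def evaluate_prompt(
    template: str,
    examples: Iterable[str],
    complete: Callable[[str], str],
    check: Callable[[str], bool],
) -> float:
    """Return the fraction of examples whose model output passes `check`."""
    results = [check(complete(template.format(text=text))) for text in examples]
    return sum(results) / len(results) if results else 0.0
```

Running each prompt variant over the same examples and comparing the scores turns “this prompt seems better” into a measurable comparison. Replies can vary between runs, so small differences in score may be noise.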

Iterative Prompt Development is a systematic approach that focuses on a continuous improvement process, enabling developers to create more effective prompts for their specific applications with LLMs.

Text Summarization

Understanding Text Summarization

Text summarization is an application of Natural Language Processing (NLP) that focuses on creating a concise yet representative version of a longer piece of content. In essence, this task involves condensing the main points of a given text into a shorter summary, allowing users to grasp the gist of the content without needing to read the entire piece.

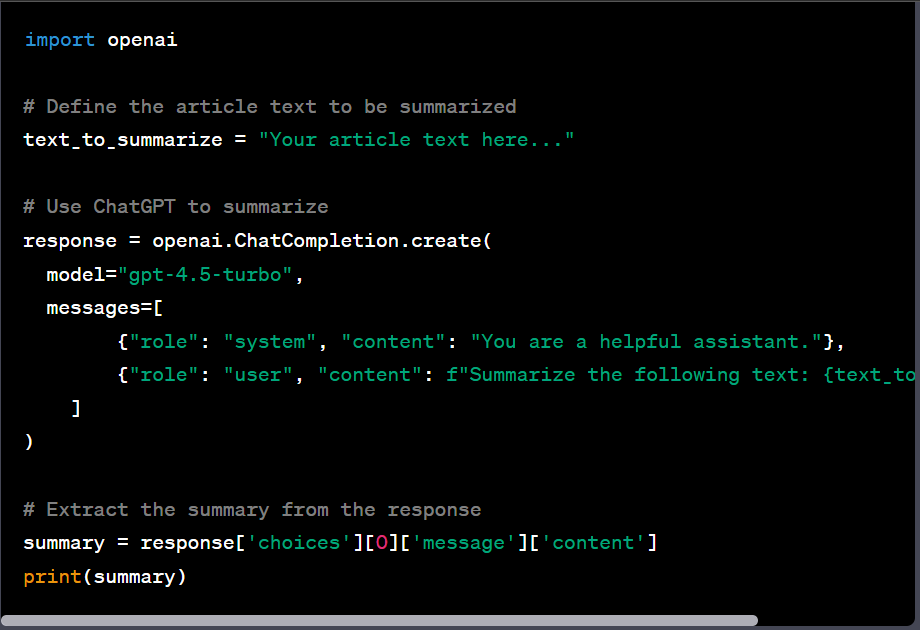

Utilizing ChatGPT for Summarization

ChatGPT, an AI model developed by OpenAI, has been trained on a large corpus of text and is well-equipped for this task. You can use the ChatGPT web interface to summarize articles and digest more content quickly. For a more systematic approach, you can run summarization programmatically through the OpenAI API, which gives you control over the prompt and lets you process many texts automatically.

Controlling the Length of the Summary

You can influence the length of the generated summary by asking for a limit on the number of words, sentences, or characters. Treat such limits as approximate targets rather than exact counts. Shorter summaries may also fail to capture the depth and nuance of the original text.
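Here is a sketch of a prompt builder with optional length limits; the function names and wording are illustrative. Models process tokens rather than words or characters, so they tend to treat these limits as approximate. A quick check of the result in code, as the hypothetical `within_word_limit` does, is a simple safeguard.

```python
# Hypothetical helpers for illustration; not part of any library.
def summary_prompt(text, max_words=None, max_sentences=None, max_chars=None):
    """Build a summarization prompt with optional (approximate) length limits."""
    limits = []
    if max_words is not None:
        limits.append(f"at most {max_words} words")
    if max_sentences is not None:
        limits.append(f"at most {max_sentences} sentences")
    if max_chars is not None:
        limits.append(f"at most {max_chars} characters")
    limit_text = f" Use {' and '.join(limits)}." if limits else ""
    return f"Summarize the text enclosed in <text> tags.{limit_text}\n<text>\n{text}\n</text>"


def within_word_limit(summary, max_words):
    """Verify the model's output, since requested limits are only approximate."""
    return len(summary.split()) <= max_words
```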

Special Purpose Summaries

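You can tailor the summary prompt to a specific purpose or audience. For example, a summary of product reviews written for a shipping department can focus on delivery details. A summary written for a pricing department can instead focus on price and perceived value. Tailored summaries like these make the output more useful to each group of users.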

Information Extraction

Instead of summarizing, you can ask ChatGPT to extract only the information relevant to a given purpose. Extraction is useful when specific details matter more than the overall gist, because even a focused summary may include material unrelated to that purpose.
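The two prompt builders below contrast the approaches using a hypothetical shipping-department use case. Both function names and the `<review>` delimiter are illustrative. The first still produces a general summary, slanted toward the chosen focus. The second asks only for the relevant details.

```python
# Hypothetical prompt builders for illustration; not part of any library.
def focused_summary_prompt(review, focus, max_words=30):
    """Summarize the whole review while emphasizing one aspect."""
    return (
        f"Summarize the review enclosed in <review> tags in at most {max_words} words, "
        f"focusing on any aspects relevant to {focus}.\n"
        f"<review>\n{review}\n</review>"
    )


def extraction_prompt(review, focus):
    """Pull out only the details that matter for one purpose."""
    return (
        "From the review enclosed in <review> tags, extract only the information "
        f"relevant to {focus}. Limit your answer to 30 words.\n"
        f"<review>\n{review}\n</review>"
    )
```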

Batch Summarization

ChatGPT’s summarization abilities extend naturally to multiple pieces of text. For instance, you could loop over a set of reviews and generate a concise summary of each, giving a quick view of the overall sentiment and key details. This is especially valuable in applications that handle large volumes of text. Users can quickly get a sense of what the texts contain and dive deeper if desired.
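Here is a sketch of that loop. `complete` is a stand-in for whatever function sends a prompt to the model and returns its text, and `summarize_all` is a hypothetical name. Requests are made one at a time, so large batches may need to respect the API’s rate limits.

```python
from typing import Callable, List


# Hypothetical batch helper; `complete` is any prompt -> reply function you supply.
def summarize_all(reviews: List[str], complete: Callable[[str], str], max_words: int = 20) -> List[str]:
    """Summarize each review independently and return the summaries in input order."""
    summaries = []
    for review in reviews:
        prompt = (
            f"Summarize the review enclosed in <review> tags in at most {max_words} words.\n"
            f"<review>\n{review}\n</review>"
        )
        summaries.append(complete(prompt).strip())
    return summaries
```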

Information Extraction

Understanding Information Extraction

Information extraction is a subtask of Natural Language Processing (NLP) that focuses on automatically extracting structured information from unstructured text data. This could involve extracting labels, recognizing named entities, understanding the sentiment, inferring topics, and more.

Traditional Machine Learning vs. Large Language Models

Traditionally, each information-extraction task, such as sentiment analysis or named entity recognition, required its own machine learning model. Each model needed its own labeled dataset, training process, and deployment, which made the work time-consuming and labor-intensive.

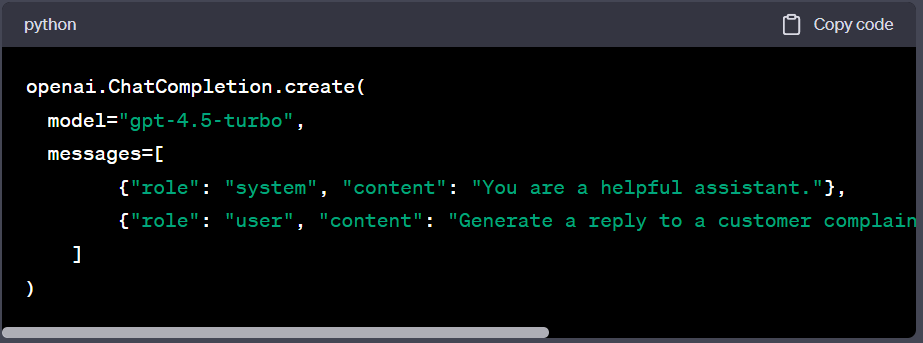

In contrast, large language models such as GPT-3.5 Turbo and GPT-4 can perform a range of these tasks when given a suitable prompt, which removes the need for a separate model per task. This flexibility and simplicity can speed up application development significantly.

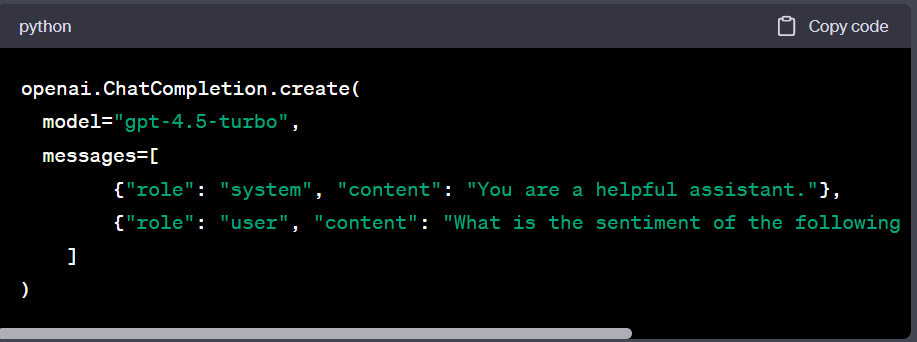

Performing Information Extraction with ChatGPT

To extract information using ChatGPT, you only need a prompt that states what you want. For instance, to analyze the sentiment of a product review, you can ask for the review’s sentiment and constrain the answer to a single word, such as “positive” or “negative”.
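Here is a sketch of such a prompt, together with a parser that normalizes the reply. Constraining the answer to one of two words makes it easy to consume in code. The parser returns `None` for anything unexpected rather than guessing. Function names are hypothetical.

```python
# Hypothetical helpers for illustration; not part of any library.
def sentiment_prompt(review):
    """Ask for a one-word sentiment label so the reply is easy to parse."""
    return (
        "What is the sentiment of the product review enclosed in <review> tags? "
        'Answer with a single word, either "positive" or "negative".\n'
        f"<review>\n{review}\n</review>"
    )


def parse_sentiment(reply):
    """Normalize the model's reply; return None if it is not an expected label."""
    label = reply.strip().strip(".\"'").lower()
    return label if label in {"positive", "negative"} else None
```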

Topic Identification and Zero-Shot Learning

With Large Language Models (LLMs), a single prompt can extract several types of information from one piece of text. For example, given a product review, you could design a prompt that extracts both the review’s sentiment and the product features it discusses.

The term “zero-shot learning” refers to a model performing a task without having been given any labeled examples of that task, whether in task-specific training or in the prompt. With LLMs, this applies naturally to topic identification. A prompt that simply instructs the model to identify a text’s topics, or to check which of a list of candidate topics it covers, is often enough.

For example, if you give a model a text about hiking routes and ask for its main topic, it can respond with “hiking routes” or something similar. It does so even though it was never trained on a dataset labeled with that topic. This is a powerful aspect of LLMs such as GPT-4: they can be used for a wide range of tasks without task-specific training data.
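You can make zero-shot topic identification machine-readable by giving the model a fixed list of candidate topics and asking for a 0 or 1 per topic. The sketch below assumes the model follows the requested `topic: 0` / `topic: 1` line format. Lines that do not match are skipped rather than guessed at. Function names are hypothetical.

```python
# Hypothetical helpers for illustration; not part of any library.
def topic_prompt(text, candidate_topics):
    """Ask the model to flag which candidate topics the text covers (zero-shot)."""
    topics = ", ".join(candidate_topics)
    return (
        "Determine whether each item in the following list of topics is a topic in the "
        "text enclosed in <text> tags. Answer with one line per topic in the form "
        "'topic: 0' or 'topic: 1'.\n"
        f"List of topics: {topics}\n"
        f"<text>\n{text}\n</text>"
    )


def parse_topic_flags(reply):
    """Turn 'topic: 0/1' lines into a dict, skipping lines that do not match."""
    flags = {}
    for line in reply.splitlines():
        topic, sep, value = line.rpartition(":")
        if sep and value.strip() in {"0", "1"}:
            flags[topic.strip().lower()] = value.strip() == "1"
    return flags
```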

Transforming Responses for Further Processing

To make the model’s output more consistent and easier to process, ask it to format its response as a JSON object. Your code can then load that output into a Python dictionary or another data structure for further analysis, provided it checks that the reply is valid JSON.
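Here is a sketch of both halves: a prompt that requests several fields as JSON, and a parser built on Python’s standard `json` module. Models sometimes wrap JSON in a Markdown code fence, so the parser strips an optional fence first. `json.loads` raises `json.JSONDecodeError` when the text is still not valid JSON, and the caller should handle that case. The key names and function names are illustrative.

```python
import json

FENCE = "`" * 3  # a Markdown code fence, built from single backticks for this listing


# Hypothetical helpers for illustration; the JSON keys are arbitrary choices.
def review_json_prompt(review):
    """Request several fields at once, formatted as a JSON object."""
    return (
        "Identify the following items from the review enclosed in <review> tags:\n"
        "- sentiment: positive or negative\n"
        "- features: a list of product features the review mentions\n"
        'Format your answer as a JSON object with the keys "sentiment" and "features".\n'
        f"<review>\n{review}\n</review>"
    )


def parse_json_reply(reply):
    """Load the reply as JSON, tolerating a surrounding Markdown code fence."""
    text = reply.strip()
    if text.startswith(FENCE):
        text = text.strip("`").strip()
        if text.lower().startswith("json"):
            text = text[len("json"):]
    return json.loads(text)  # raises json.JSONDecodeError if the reply is not valid JSON
```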

Powering Up Software Applications with Large Language Models

By incorporating large language models into your software applications, you can perform complex natural language processing tasks efficiently. Whether the task is inferring sentiment, extracting names, or identifying topics, these models can add significant value to an application and speed up development. They also make natural language processing more accessible to experienced developers and newcomers alike.

Text Transformation

Understanding Text Transformation

Text transformation means taking a piece of text and converting it into a different form. With generative AI, text transformation can include language translation, tone adjustment, grammar and spelling correction, and format conversion.

Language Translation with Large Language Models

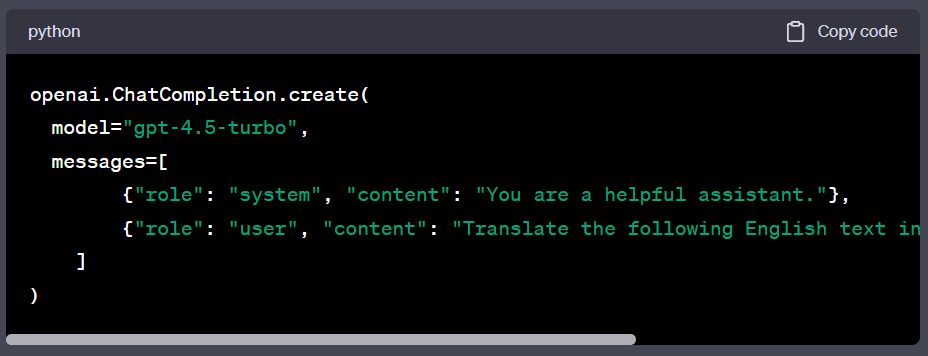

Generative models like ChatGPT, trained on vast amounts of text data in numerous languages, are well-equipped to perform language translation tasks. They can translate a given piece of text into multiple languages simultaneously. However, the accuracy and fluency of translation can vary depending on the language pair and the specific content.

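To use ChatGPT for translation, the prompt should contain the source text and name the target language or languages. For example, you can ask for a sentence to be translated into both French and Spanish.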
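Here is a minimal translation prompt builder; the function name and output instructions are illustrative. Asking the model to label each translation makes a multi-language reply easier to read and to split in code.

```python
# Hypothetical prompt builder for illustration; not part of any library.
def translation_prompt(text, languages):
    """Ask for translations into several languages in one request, each labeled."""
    targets = ", ".join(languages)
    return (
        f"Translate the text enclosed in <text> tags into each of these languages: {targets}. "
        "Put each translation on its own line, starting with the language name and a colon.\n"
        f"<text>\n{text}\n</text>"
    )
```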

Creating a Universal Translator

In scenarios where user messages may arrive in many languages (e.g., at a global delivery company), you can use ChatGPT to build a ‘universal translator’. For each incoming message, such an application can ask the model to identify the language and then translate the message into a chosen language. That way, everyone on the team can understand it.
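Here is a sketch of the loop behind such a translator. It uses two prompts per message: one to identify the language and one to translate. `complete` stands for any function that sends a prompt and returns the reply text, and `universal_translate` is a hypothetical name.

```python
from typing import Callable, List, Tuple


# Hypothetical sketch; `complete` is any prompt -> reply function you supply.
def universal_translate(
    messages: List[str], complete: Callable[[str], str], target: str = "English"
) -> List[Tuple[str, str]]:
    """Return (detected language, translation) for each incoming message."""
    results = []
    for message in messages:
        language = complete(
            "Tell me which language the text enclosed in <text> tags is written in. "
            f"Answer with the language name only.\n<text>\n{message}\n</text>"
        ).strip()
        translation = complete(
            f"Translate the text enclosed in <text> tags into {target}.\n"
            f"<text>\n{message}\n</text>"
        ).strip()
        results.append((language, translation))
    return results
```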

Tone Transformation

The tone of a piece of writing can significantly influence how it’s perceived by different audiences. ChatGPT can also assist in adjusting the tone of a text, making it more formal, casual, or anything in between, depending on the intended audience.
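Here is a sketch of a tone-rewriting prompt; the function name and parameters are illustrative. For example, you could pass a slangy message such as “Dude, check out this lamp.” with the tone “formal” and the audience “a business letter”.

```python
# Hypothetical prompt builder for illustration; not part of any library.
def tone_prompt(text, tone, audience):
    """Ask for the same content rewritten in a different tone for a given audience."""
    return (
        f"Rewrite the text enclosed in <text> tags in a {tone} tone suitable for {audience}. "
        "Keep the meaning unchanged.\n"
        f"<text>\n{text}\n</text>"
    )
```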

Format Conversion

ChatGPT can help convert between formats such as JSON, HTML, XML, Markdown, etc. This is particularly useful in scenarios where data needs to be transformed between various forms for different processing stages.
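Here is a sketch of a format-conversion prompt. It serializes the input with Python’s `json` module before sending it, so the model receives well-formed JSON. The target format is just a string, so the same hypothetical builder covers HTML, XML, or Markdown. For conversions with fixed rules, a conventional parser or serializer may be more reliable, and it can be worth validating the model’s output.

```python
import json


# Hypothetical prompt builder for illustration; not part of any library.
def format_conversion_prompt(data, target_format="an HTML table with column headers and a title"):
    """Serialize `data` as JSON and ask the model to convert it to another format."""
    payload = json.dumps(data, indent=2)
    return (
        f"Convert the JSON enclosed in <json> tags into {target_format}. "
        "Output only the converted result.\n"
        f"<json>\n{payload}\n</json>"
    )
```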

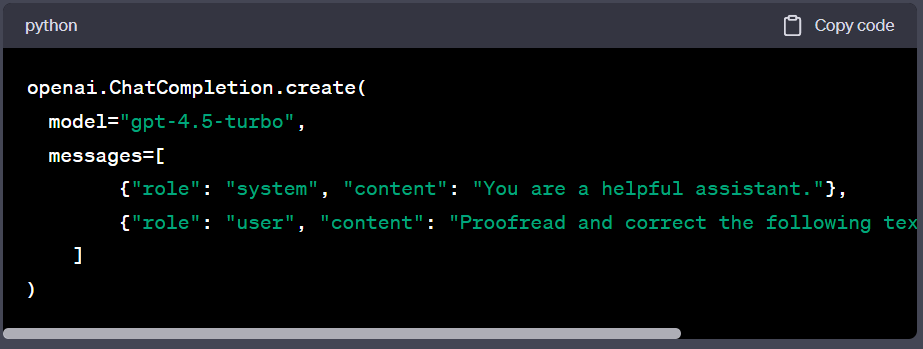

Grammar and Spelling Correction

ChatGPT is also proficient at proofreading and correcting grammatical and spelling errors in a text, which is especially helpful when writing in a non-native language. The prompts for this task can be refined iteratively so that the model corrects errors more reliably.

A proofreading prompt typically asks the model to correct the text, return only the corrected version, and say so explicitly when it finds no errors.
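Here is a sketch of such a prompt, plus a check for the “no errors” case. The sentinel phrase is an arbitrary choice, and matching it in code only works if the model reproduces it. For that reason the hypothetical `needs_changes` check tolerates a trailing period.

```python
# Hypothetical helpers for illustration; the sentinel phrase is an arbitrary choice.
NO_ERRORS = "No errors found"


def proofread_prompt(text):
    """Ask for a corrected version, or a fixed phrase when nothing needs changing."""
    return (
        "Proofread and correct the text enclosed in <text> tags. "
        "Output only the corrected text. "
        f'If you find no errors, output exactly "{NO_ERRORS}".\n'
        f"<text>\n{text}\n</text>"
    )


def needs_changes(reply):
    """True unless the model replied with the sentinel phrase (trailing period allowed)."""
    return reply.strip().rstrip(".") != NO_ERRORS
```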

Using Redlines Python Package

The Redlines Python package highlights the differences between two pieces of text. Using it to compare the original text with the corrected version returned by the AI gives a clear view of every correction made.
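The sketch below swaps in Python’s standard `difflib` module for Redlines, so it runs without extra packages. It is not the Redlines API. It compares the two texts word by word and marks deletions as `[-word-]` and insertions as `{+word+}`. Because both texts are split on whitespace, differences in spacing are ignored. `word_diff` is a hypothetical name.

```python
import difflib


# Stand-in for Redlines using the standard library; not the Redlines API.
def word_diff(original, corrected):
    """Mark deletions as [-word-] and insertions as {+word+}, word by word."""
    a, b = original.split(), corrected.split()
    out = []
    for op, i1, i2, j1, j2 in difflib.SequenceMatcher(a=a, b=b).get_opcodes():
        if op == "equal":
            out.extend(a[i1:i2])
        if op in ("delete", "replace"):
            out.extend(f"[-{w}-]" for w in a[i1:i2])
        if op in ("insert", "replace"):
            out.extend(f"{{+{w}+}}" for w in b[j1:j2])
    return " ".join(out)
```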

By incorporating these text transformation capabilities into software applications, developers can significantly enhance the functionality and versatility of their solutions, opening up new possibilities in natural language processing.

Text Expansion

Understanding Text Expansion

Text expansion involves transforming a shorter text into a more detailed and lengthy composition. Large language models, such as ChatGPT, are proficient at this task and can help generate personalized messages, comprehensive emails, blog posts, and more based on simple prompts or brief outlines.

Generating Personalized Messages

One use case for text expansion is generating customized messages. For instance, the AI could craft a personalized response to a customer complaint in a customer service scenario. In these instances, the model can consider the sentiment of the customer’s complaint and use it to generate a contextually relevant and empathetic response.

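To generate such a response, the prompt can include the customer’s review and its sentiment, which can itself be inferred with a separate prompt. It should also give instructions on how to respond to positive and negative reviews.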
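Here is a sketch of such a prompt; the wording and the function name are illustrative. The sentiment is passed in as a parameter, and it could come from a separate sentiment prompt. The signature line makes it clear to the customer that an AI wrote the reply.

```python
# Hypothetical prompt builder for illustration; not part of any library.
def reply_prompt(review, sentiment):
    """Build a customer-service reply prompt that discloses the AI author in the signature."""
    return (
        "You are a customer service AI assistant. Write an email reply to the customer "
        "whose review is enclosed in <review> tags.\n"
        "If the sentiment is positive or neutral, thank them for their review.\n"
        "If the sentiment is negative, apologize and suggest that they contact customer service.\n"
        "Use specific details from the review. Write in a concise and professional tone.\n"
        "Sign the email as 'AI customer agent'.\n"
        f"Review sentiment: {sentiment}\n"
        f"<review>\n{review}\n</review>"
    )
```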

Incorporating Transparency

When generating text that will be shown to users, it’s crucial to be transparent that an AI created the content, for example by signing a generated email as coming from an AI assistant. This is an important aspect of ethical AI deployment.

Controlling Output Variety with the Temperature Parameter

The ‘temperature’ parameter in generative AI models controls the degree of randomness in the model’s responses. Higher temperature values produce more varied outputs, while lower values produce more predictable and consistent ones.

In applications requiring consistent, reliable responses, a low temperature value (even zero) is recommended. If the application involves creative tasks that benefit from varied responses, a higher temperature value may be preferable.
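In OpenAI’s chat completions API, temperature is a request parameter that accepts values from 0 to 2. The hypothetical helper below builds the request arguments and rejects out-of-range values early. The model name is left to the caller.

```python
# Hypothetical helper for illustration; the dict mirrors keyword arguments
# accepted by client.chat.completions.create in the openai 1.x package.
def chat_request(prompt, model, temperature=0.0):
    """Build chat completion arguments; low temperature = predictable, high = varied."""
    if not 0.0 <= temperature <= 2.0:
        raise ValueError("temperature must be between 0 and 2")
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
    }
```

With the 1.x client, the dictionary can be unpacked as `client.chat.completions.create(**chat_request(prompt, model_name, temperature=0.7))`, where `model_name` is whichever model you use.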

Text expansion can be a powerful tool when integrated into software applications. It generates detailed, contextually relevant text from simple prompts or brief outlines, enabling use cases from personalized messaging to comprehensive written content. Combined with sensible parameter tuning and ethical practices such as transparency, it can significantly enhance software applications.

Designing a Helper Function

A common pattern is a helper function that encapsulates the request setup and extracts the text from the response. It simplifies interaction with the model by abstracting away the details of the request and response format, which makes the code easier to understand and reuse.
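Here is a minimal sketch of such a helper for version 1.x of the `openai` package. The names `get_completion` and `build_messages` are hypothetical, the model name is supplied by the caller, and temperature defaults to 0 for more predictable output. The optional `client` parameter lets you reuse one client or substitute a fake in tests. When it is omitted, a client is created that reads `OPENAI_API_KEY` from the environment.

```python
# Hypothetical helpers for illustration, written against the openai 1.x client interface.
def build_messages(prompt, system=None):
    """Wrap a prompt (and an optional system message) in the chat message format."""
    messages = []
    if system:
        messages.append({"role": "system", "content": system})
    messages.append({"role": "user", "content": prompt})
    return messages


def get_completion(prompt, model, temperature=0.0, system=None, client=None):
    """Send one prompt and return only the text of the model's reply."""
    if client is None:
        from openai import OpenAI  # lazy import; OpenAI() reads OPENAI_API_KEY from the environment
        client = OpenAI()
    response = client.chat.completions.create(
        model=model,
        messages=build_messages(prompt, system),
        temperature=temperature,
    )
    return response.choices[0].message.content
```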

You can expand this helper function or create additional ones for more complex scenarios. For instance, you might need a function to maintain the state of a conversation, manage tokens to prevent exceeding the model’s maximum token limit, or handle different models and parameters.
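Here is a sketch of one such extension: a class that keeps the message history for a multi-turn chat and drops the oldest messages once a fixed budget is exceeded. Counting turns is only a crude stand-in for counting tokens. A production version would measure tokens with a tokenizer such as `tiktoken`. The class and its parameters are hypothetical. The list returned by `messages()` is in the shape a chat completions request expects for its `messages` argument.

```python
# Hypothetical class for illustration; not part of the openai package.
class Conversation:
    """Keep multi-turn chat history and drop the oldest messages beyond a turn budget."""

    def __init__(self, system, max_turns=10):
        self.system = {"role": "system", "content": system}
        self.history = []
        self.max_turns = max_turns  # one turn = one user message plus one assistant reply

    def add(self, role, content):
        """Append a message, then trim the oldest ones if the history is over budget."""
        self.history.append({"role": role, "content": content})
        excess = len(self.history) - 2 * self.max_turns
        if excess > 0:
            del self.history[:excess]

    def messages(self):
        """Full message list to send with each request, system message first."""
        return [self.system] + self.history
```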

The design of these helper functions, though, will depend largely on the specific needs and complexity of your chatbot or application. Different applications might have different requirements, which would influence the design and complexity of the helper functions.

So, while not a requirement, helper functions are a useful and often recommended practice in software development, including when working with large language models.

Key Takeaways

- Large Language Models (LLMs) are transformative tools that help developers build software applications quickly. They can perform tasks such as text summarization, inference, transformation, and expansion, and can be integrated into products via API calls.

- Instruction-tuned LLMs, fine-tuned to follow instructions and refined with reinforcement learning from human feedback (RLHF), have become the preferred choice for many applications.

- Working with LLMs involves mastering the art of ‘prompting’ with clear and specific instructions.

- Setting up the OpenAI Python library and integrating the OpenAI API key are foundational steps in leveraging the power of LLMs.

- LLMs come with limitations, including sometimes producing plausible but inaccurate outputs. Ongoing research is working towards addressing these weaknesses.

- Iterative Prompt Development, refining prompts progressively through iterations, is essential when building applications with LLMs.

- Information extraction with LLMs can replace traditional, task-specific machine learning models, speeding up the application development process significantly.

- LLMs can perform tasks such as sentiment analysis, topic identification, language translation, tone transformation, format conversion, grammar and spelling correction, and more.

- In text expansion, LLMs can generate personalized messages, comprehensive emails, blog posts, and more based on simple prompts or brief outlines, with applications requiring attention to transparency and the tuning of the ‘temperature’ parameter.

- Incorporating LLMs into software applications can significantly enhance functionality and versatility, opening new possibilities in natural language processing.