In the age of information, both organizations and individuals are heavily investing in web content creation as a means to stand out and bolster their marketing strategies. Now, more than ever, content is at the heart of competitive strategies.

The advent of generative AI and large language models (LLMs) has revolutionized the world of content creation. These tools have become indispensable for enhancing productivity and quality, setting new industry standards, and reshaping conventional practices.

However, website owners and content creators are increasingly concerned that companies like OpenAI and Anthropic might use their content to train these models. The fear is that these companies crawl sites, add the gathered data to datasets, and then use those datasets to train and improve their LLMs and products.

Some of the world’s leading websites are taking measures against AI bots like OpenAI’s GPTBot, and some content owners have taken legal action over unauthorized use of their content.

Conversely, content-rich platforms such as Reddit and Stack Overflow, which house vast knowledge bases, are exploring ways to monetize their content by charging AI companies for access. Given that these platforms contain curated content from subject matter experts, they are invaluable resources for training AI models.

Without clear rules, ethical guidelines, or governance on LLMs’ use, limitations, and behavior, many organizations are playing it safe to minimize potential risks by blocking web crawlers from entities like OpenAI and Common Crawl.

Preventing AI Bots from Accessing Your Website Content

Opting out isn’t always straightforward. We’ll first introduce some essential concepts and available options to guide you. With this knowledge, you can consult your web development team to implement actions to block AI bots effectively.

These measures primarily aim to safeguard your website from unwanted access by web crawlers and AI bots.

This can be achieved by using the robots.txt file to block AI bots like GPTBot. You can also keep your site out of public datasets such as Common Crawl by blocking the crawlers that feed them, such as the Common Crawl Bot (CCBot).

While removing your information from existing datasets is impossible, you can keep bots from crawling your content in the future. You can also opt out of crawling entirely, which stops compliant crawlers from collecting your content for LLMs.

What is a User Agent?

A “User Agent” is an identifier that web clients, such as browsers or bots, send with their requests to web servers. Just as web browsers have user agent strings that tell servers what type of software is accessing the content, bots like GPTBot have their own distinct user agent strings.
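To make this concrete, the sketch below uses Python’s standard `urllib.request` module to build (but not send) a request whose `User-Agent` header identifies a bot. The bot name `ExampleBot` and its URL are hypothetical placeholders; a real crawler such as GPTBot sends its own published string in the same header.

```python
from urllib.request import Request

# Hypothetical bot identity; real crawlers publish their own strings.
EXAMPLE_UA = "Mozilla/5.0 (compatible; ExampleBot/1.0; +https://example.com/bot)"

# Build a GET request that carries the User-Agent header. Nothing is sent here.
request = Request("https://example.com/", headers={"User-Agent": EXAMPLE_UA})

# urllib normalizes header names with str.capitalize(), so the stored key is "User-agent".
print(request.get_header("User-agent"))
```

This header is the value that server-side rules, and the user-agent groups in robots.txt, are matched against.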

Website administrators who prefer to restrict AI bots from accessing, scraping, or crawling their sites can establish rules to block requests originating from certain user agents. This is often accomplished through the robots.txt file.

Robots.txt is a file that implements the Robots Exclusion Protocol, a standard websites use to communicate directives to web crawlers and other web robots. By setting up specific rules in this file, administrators tell bots which pages or sections of the site they should avoid crawling. Compliance is voluntary: well-behaved bots follow these rules, but nothing technically enforces them.
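The sketch below shows how a well-behaved crawler might read such rules, using Python’s standard `urllib.robotparser`. The robots.txt content and URLs are made up for illustration. Note that this standard-library parser is simpler than the full Robots Exclusion Protocol; for example, it applies the first matching rule in a group rather than the most specific one.

```python
from urllib.robotparser import RobotFileParser

# Made-up robots.txt: GPTBot is shut out entirely; everyone else only loses /private/.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Disallow: /private/
"""

def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the rules in robots_txt let user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)

print(is_allowed(ROBOTS_TXT, "GPTBot", "https://example.com/blog/post"))           # False
print(is_allowed(ROBOTS_TXT, "Mozilla/5.0", "https://example.com/blog/post"))      # True
print(is_allowed(ROBOTS_TXT, "Mozilla/5.0", "https://example.com/private/notes"))  # False
```

GPTBot matches its own group and is refused everywhere, while any other agent falls through to the `*` group.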

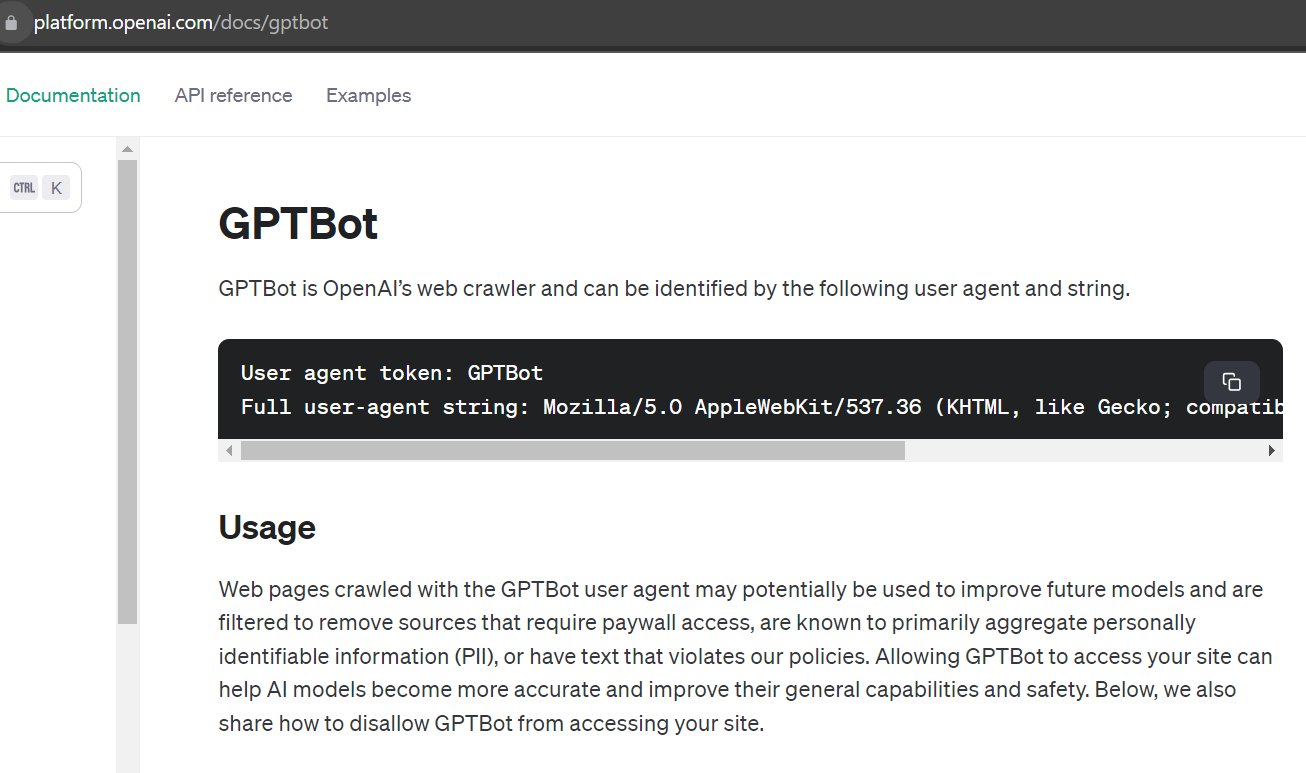

Blocking GPTBot

GPTBot is a web crawler that OpenAI launched in 2023 to collect web content that may be used to improve its models, such as GPT-4 and GPT-5 (a name for which OpenAI had filed a trademark application). According to OpenAI, allowing GPTBot to access your site can help AI models become more accurate and improve their general capabilities and safety.

Identifying GPTBot:

- User-agent token: GPTBot

- Full user-agent string: Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; GPTBot/1.0; +https://openai.com/gptbot)
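Because GPTBot announces itself with this token, a server can also refuse matching requests directly, as a complement to robots.txt. A minimal sketch, assuming a hypothetical block list made of the tokens discussed in this article: a case-insensitive substring check on the User-Agent header. User-agent strings are trivially spoofed, so this only catches bots that identify themselves honestly.

```python
from typing import Iterable

# Hypothetical block list built from the tokens discussed in this article.
BLOCKED_TOKENS = ("GPTBot", "CCBot", "ChatGPT-User")

GPTBOT_UA = (
    "Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; "
    "GPTBot/1.0; +https://openai.com/gptbot)"
)

def is_blocked_agent(user_agent: str, tokens: Iterable[str] = BLOCKED_TOKENS) -> bool:
    """True if any blocked token appears in the User-Agent header (case-insensitive)."""
    header = user_agent.lower()
    return any(token.lower() in header for token in tokens)

print(is_blocked_agent(GPTBOT_UA))  # True
print(is_blocked_agent("Mozilla/5.0 (X11; Linux x86_64; rv:118.0) Gecko/20100101 Firefox/118.0"))  # False
```

In practice this check would usually live in a web server rule, a WAF, or application middleware that returns HTTP 403 for matching requests.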

Blocking Methods:

- Via robots.txt: GPTBot can be disallowed using the robots.txt file. If you want to prevent GPTBot from accessing any portion of your website, insert the following into your robots.txt file:

- User-agent: GPTBot

- Disallow: /


To selectively allow or disallow directories:

- User-agent: GPTBot

- Allow: /directory-1/

- Disallow: /directory-2/
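Paths not covered by any rule remain crawlable. When Allow and Disallow rules overlap, RFC 9309 (the Robots Exclusion Protocol) says the most specific, that is longest, matching path wins, and Allow wins a tie. The sketch below implements that precedence for plain path prefixes only; it deliberately ignores the `*` and `$` wildcards, and the rule list simply mirrors the placeholder directories above.

```python
from typing import List, Tuple

# (directive, path) pairs for one user-agent group; paths are the placeholders above.
GPTBOT_RULES: List[Tuple[str, str]] = [
    ("allow", "/directory-1/"),
    ("disallow", "/directory-2/"),
]

def path_allowed(rules: List[Tuple[str, str]], path: str) -> bool:
    """Apply RFC 9309 precedence to plain prefix rules.

    The longest matching rule path wins; on a tie, allow beats disallow.
    Wildcards ('*' and '$') are not supported in this sketch.
    """
    best_length = -1
    allowed = True  # with no matching rule, the path may be crawled
    for directive, rule_path in rules:
        if not rule_path or not path.startswith(rule_path):
            continue  # empty rule paths and non-matching prefixes are ignored
        is_allow = directive == "allow"
        if len(rule_path) > best_length or (len(rule_path) == best_length and is_allow):
            best_length = len(rule_path)
            allowed = is_allow
    return allowed

print(path_allowed(GPTBOT_RULES, "/directory-1/page.html"))  # True
print(path_allowed(GPTBOT_RULES, "/directory-2/page.html"))  # False
print(path_allowed(GPTBOT_RULES, "/about/"))                 # True: no rule matches

# Block everything except /directory-1/ by pairing "Disallow: /" with a longer Allow.
ONLY_DIR_1 = [("disallow", "/"), ("allow", "/directory-1/")]
print(path_allowed(ONLY_DIR_1, "/directory-1/page.html"))  # True
print(path_allowed(ONLY_DIR_1, "/directory-2/page.html"))  # False
```

The last two calls show why the longest-match rule matters: a site-wide `Disallow: /` plus a narrower `Allow` keeps one directory open and everything else closed.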

Using IP Ranges: GPTBot requests originate from IP address ranges published on OpenAI’s site. You can block these ranges at the server level, for example in an Apache .htaccess file. Because OpenAI may change the published ranges, this method requires updating your rules whenever the list changes.
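A minimal sketch of the IP-range approach, using Python’s standard `ipaddress` module. The CIDR blocks below are placeholder documentation ranges (RFC 5737), not OpenAI’s; substitute the list OpenAI currently publishes. The helper that emits an `.htaccess` snippet assumes Apache 2.4 with `mod_authz_core`, and that your server configuration permits `Require` directives in `.htaccess`.

```python
import ipaddress
from typing import Iterable, List, Union

Network = Union[ipaddress.IPv4Network, ipaddress.IPv6Network]

# Placeholder ranges from RFC 5737; replace with the ranges OpenAI publishes for GPTBot.
GPTBOT_CIDRS = ["192.0.2.0/24", "198.51.100.0/24"]

def parse_networks(cidrs: Iterable[str]) -> List[Network]:
    """Turn CIDR strings into network objects (raises ValueError on bad input)."""
    return [ipaddress.ip_network(cidr) for cidr in cidrs]

def in_any_range(client_ip: str, networks: Iterable[Network]) -> bool:
    """True if client_ip falls inside any network; IPv4/IPv6 mismatches never match."""
    address = ipaddress.ip_address(client_ip)
    return any(address in network for network in networks)

def htaccess_block(cidrs: Iterable[str]) -> str:
    """Emit an Apache 2.4 block that admits everyone except the listed ranges."""
    lines = ["<RequireAll>", "    Require all granted"]
    lines += [f"    Require not ip {cidr}" for cidr in cidrs]
    lines.append("</RequireAll>")
    return "\n".join(lines)

networks = parse_networks(GPTBOT_CIDRS)
print(in_any_range("192.0.2.15", networks))   # True
print(in_any_range("203.0.113.7", networks))  # False
print(htaccess_block(GPTBOT_CIDRS))
```

Regenerating the snippet from the latest published list is one way to handle the regular updates this method needs.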

Important Note: According to OpenAI, pages crawled by GPTBot are filtered to remove sources that require paywall access, sources known to primarily aggregate personally identifiable information (PII), and text that violates OpenAI’s policies.

Training Data for ChatGPT: Originating from Public Datasets

ChatGPT builds on models trained on various datasets, including Common Crawl (filtered), WebText2, Books1, Books2, and Wikipedia, the training mix OpenAI described for GPT-3. To keep your content out of major data sources like Common Crawl and WebText2, focus on the crawlers that collect their data.

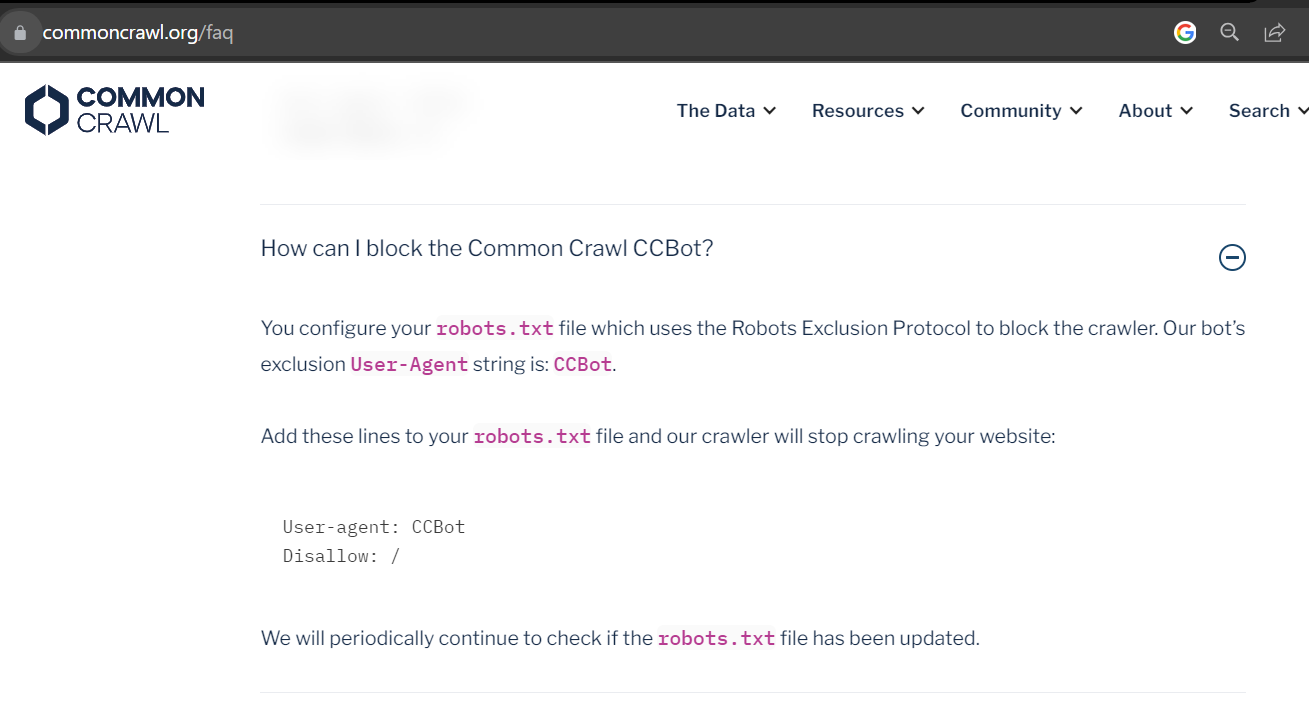

Blocking Common Crawl Bot (CCBot)

Common Crawl offers one of the largest public datasets used for training: a free repository of web-crawled data. CCBot is the crawler that collects this data, and Common Crawl is a primary data source for ChatGPT and other LLMs. The crawled data is hosted on Amazon S3.

To prevent CCBot from crawling your website, add a rule for its user-agent token, CCBot, to your robots.txt file, following the Robots Exclusion Protocol. Add the lines below to your robots.txt file to stop it from crawling your site:

- User-agent: CCBot

- Disallow: /
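If you block several crawlers, generating the file can keep the groups consistent. This hypothetical helper writes one `Disallow: /` group per user-agent token; the token list in the usage line is an example based on the bots discussed in this article.

```python
from typing import Iterable

def build_robots_txt(blocked_agents: Iterable[str]) -> str:
    """Return robots.txt text with a full-site Disallow group for each agent token."""
    groups = [f"User-agent: {agent}\nDisallow: /\n" for agent in blocked_agents]
    # Separate groups with a blank line for readability.
    return "\n".join(groups)

robots_txt = build_robots_txt(["GPTBot", "CCBot"])
print(robots_txt)
```

Agents that are not listed, such as search engine crawlers, get no group and therefore keep their default access.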

Furthermore, CCBot runs on Apache Nutch, an open-source web crawler built on Apache Hadoop, which allows it to scale to very large crawls. Thanks to its modular design, Nutch lets developers write plugins for many different tasks. It’s also worth noting that CCBot ranks as the second most blocked AI bot.

About the WebText2 Dataset

WebText2 is a proprietary dataset curated by OpenAI, built mainly from web pages linked in Reddit submissions that received three or more upvotes. It played a significant role in training models like GPT-3 and GPT-3.5. An open-source recreation, OpenWebText2, also exists. However, OpenAI hasn’t been fully transparent about the specifics of WebText2, including its content, size, and exact sources. No user agent associated with WebText2 has been publicly identified, so there is currently no token to block.

Blocking ChatGPT-User

ChatGPT-User is a user agent associated with OpenAI. Contrary to some beliefs, it is not used to crawl websites automatically. Instead, it is associated with OpenAI’s web browsing plugin, which fetches pages on behalf of ChatGPT users. Moreover, requests from ChatGPT-User are not used to train any of OpenAI’s models.

If you wish to block ChatGPT-User from accessing your website, you can make the necessary changes in the robots.txt file. Here’s a general instruction to disallow access entirely:

- User-agent: ChatGPT-User

- Disallow: /

However, if you’d like to restrict access to specific sections of your site, you can do so as illustrated below:

- User-agent: ChatGPT-User

- Allow: /directory-1/

- Disallow: /directory-2/

Bard and Vertex AI

Google has introduced a new product token named “Google-Extended” for use in the robots.txt file. This token lets website owners manage whether their site content is used to improve two of Google’s AI services, Bard and Vertex AI generative APIs. By adding rules for “Google-Extended,” site administrators can specify which pages may be used this way.
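Google-Extended is a robots.txt token only: Google does not send it as a User-Agent header, because fetching is done by its existing crawlers such as Googlebot. It can therefore be controlled only through robots.txt, not by matching user agents on the server. The sketch below, again using Python’s `urllib.robotparser` with a placeholder URL, models how a Google-Extended group leaves Googlebot’s rules untouched.

```python
from urllib.robotparser import RobotFileParser

# Opt out of Google-Extended only; no rules are written for Googlebot.
ROBOTS_TXT = """\
User-agent: Google-Extended
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

url = "https://example.com/article"  # placeholder URL
googlebot_allowed = parser.can_fetch("Googlebot", url)       # no matching group
extended_allowed = parser.can_fetch("Google-Extended", url)  # matches the group above
print(googlebot_allowed, extended_allowed)  # True False
```

Google states that Google-Extended does not affect a site’s inclusion or ranking in Google Search, which is what the separate groups reflect.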

This development gives website owners greater control over how AI systems use their content. It helps them guard against unwanted use of their material, while content they choose to allow can help improve the accuracy and reliability of Bard and Vertex AI.

Claude AI powered by Anthropic

Claude is an advanced conversational AI assistant developed by Anthropic and trained on an extensive, proprietary dataset refined with safety, ethics, and conversational quality in mind. Anthropic keeps the details of this dataset confidential. Requests associated with Claude may use a general user agent string, such as “AnthropicAI/Claude,” that does not reveal a specific version or build, and Anthropic might periodically change the user agent strings linked to Claude, which would make blocking by user agent alone less reliable.

Other Ways to Block AI Scrapers

- Advanced Bot Detection Techniques: Use detection methods designed to identify sophisticated bots, such as those that disguise their user agent or rotate IP addresses.

- Web Application Firewall (WAF): Install and configure a WAF to filter website traffic. This will enable you to block requests from AI bots by targeting specific user agents or IP addresses.

- Use CAPTCHAs or Proof of Work: Implementing these can deter automated bots by requiring a human-like response or computational proof.

- Monitor Server Logs: Regularly review your server logs for indications of abnormal bot activity.
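As an example of log monitoring, the sketch below counts requests from self-identified AI crawlers in an access log. It assumes the common Apache/Nginx “combined” log format, where the user agent is the last quoted field; the watch list and the sample log lines are made up for illustration. Bots that spoof a browser user agent will not show up in these counts.

```python
import re
from collections import Counter
from typing import Iterable

# "Combined" log format: the user agent is the last quoted field on each line.
COMBINED_LOG = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[[^\]]*\] "[^"]*" \d{3} \S+ "[^"]*" "(?P<ua>[^"]*)"'
)

AI_TOKENS = ("GPTBot", "CCBot", "ChatGPT-User")  # hypothetical watch list

def count_ai_hits(lines: Iterable[str]) -> Counter:
    """Count log lines whose user agent contains each watched token."""
    hits: Counter = Counter()
    for line in lines:
        match = COMBINED_LOG.match(line)
        if not match:
            continue  # skip lines in other formats
        user_agent = match.group("ua").lower()
        for token in AI_TOKENS:
            if token.lower() in user_agent:
                hits[token] += 1
    return hits

# Made-up sample lines using documentation IP addresses.
SAMPLE = [
    '192.0.2.10 - - [10/Oct/2023:13:55:36 +0000] "GET / HTTP/1.1" 200 512 "-" '
    '"Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; GPTBot/1.0; +https://openai.com/gptbot)"',
    '198.51.100.7 - - [10/Oct/2023:13:56:01 +0000] "GET /blog HTTP/1.1" 200 2048 "-" '
    '"CCBot/2.0 (https://commoncrawl.org/faq/)"',
    '203.0.113.5 - - [10/Oct/2023:13:57:12 +0000] "GET / HTTP/1.1" 200 512 "-" '
    '"Mozilla/5.0 (X11; Linux x86_64; rv:118.0) Gecko/20100101 Firefox/118.0"',
]

print(count_ai_hits(SAMPLE))
```

Running a count like this on a schedule makes sudden spikes from a particular crawler easy to spot.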

Keep Sensitive Data Out of LLMs

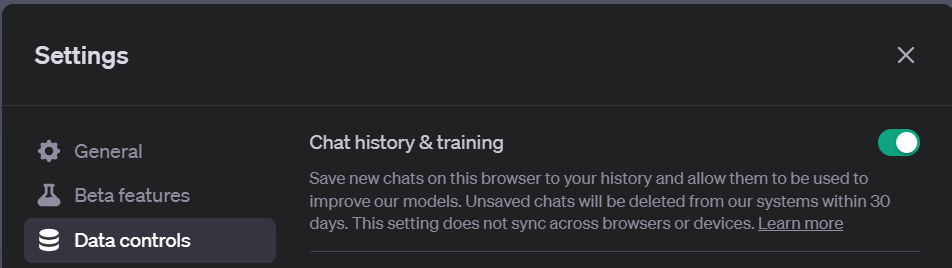

Content creators often use ChatGPT for copywriting tasks that involve private information, such as contracts, strategies, and plans. That information can then be used to train the model, as ChatGPT’s privacy policy states:

“We may use your data to train and improve our AI systems, including ChatGPT. This may involve using your data to generate new text or to improve the accuracy of our existing text generation capabilities.”

While OpenAI has introduced a feature to turn off chat history and its use for training, users should be aware that it might not always function as expected.

Conclusion

As of October 2023, blocking GPTBot and CCBot are two of the most common actions website owners take. Note that when you block ChatGPT-User, you also prevent tools built on OpenAI’s ChatGPT platform, such as the browsing plugin, from accessing your site on behalf of users. Monitor bot activity continuously to limit its potential negative impact on your website. A balanced approach ensures that legitimate crawlers aren’t inadvertently blocked, keeping your site healthy and accessible.