Table of Contents

- The Journey of IoT Data: A Simple Breakdown of ETL

- Powering the Pipeline: Essential Tools of the Trade

- Alternative Tools for Stream Processing and Orchestration

- Building Real-Time IoT Pipelines: Best Practices at a Glance

- Beyond ETL: A Look at Modern ELT

- When ETL Makes Sense

- Beyond the Pipeline: The AI-Powered Advantage

- Your Data Is an Asset—Let’s Unlock Its Value

Imagine your factory floor. A single piece of machinery generates thousands of data points every second: vibration, temperature, energy consumption. Now multiply that by every asset in your facility. This is the reality of the Internet of Things (IoT): a constant torrent of raw data. On its own, though, this data is just noise. Its value is unlocked only when you can quickly collect, translate, and analyze it to make critical business decisions in real time.

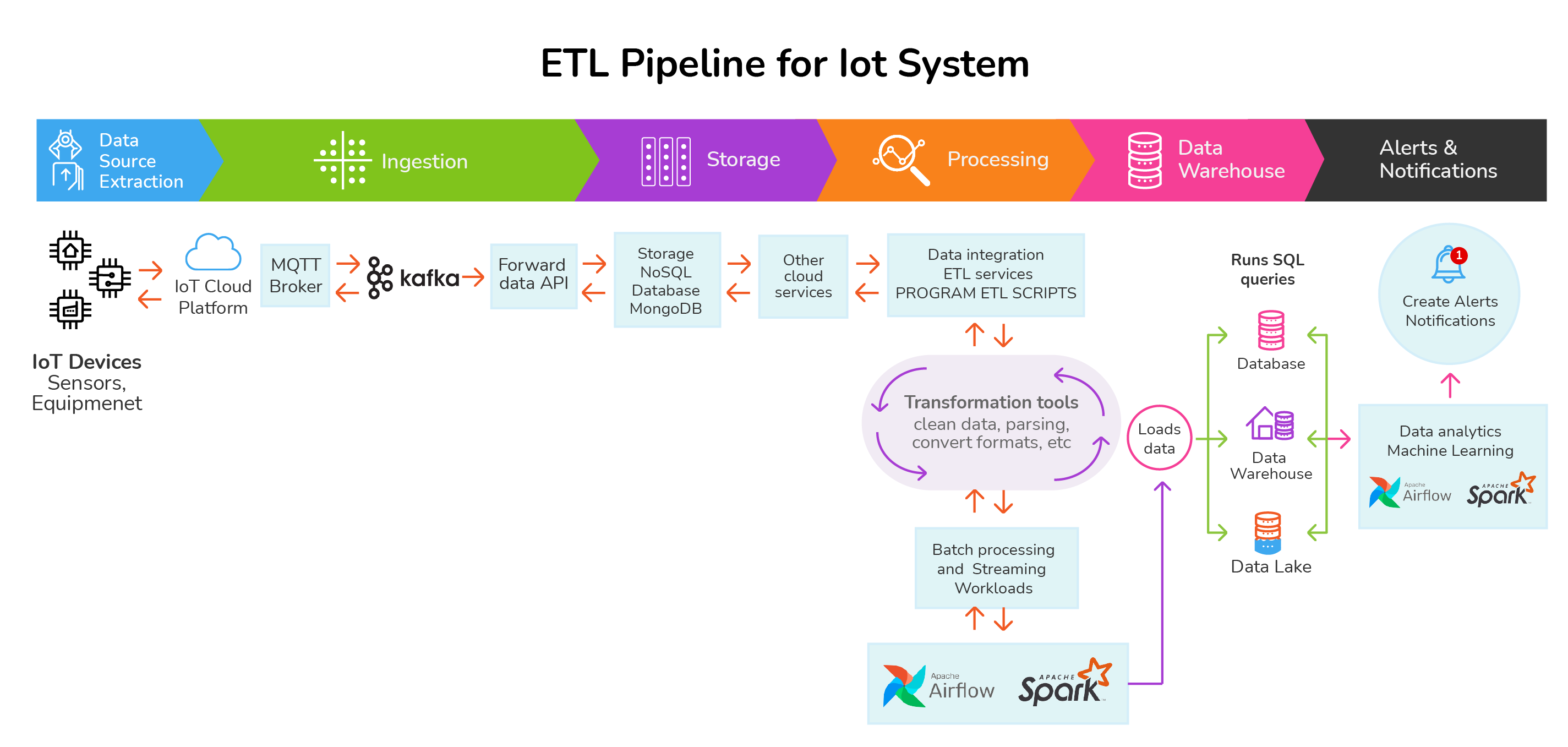

This is where a Real-Time ETL Pipeline comes in. It’s the digital factory floor that transforms that raw, chaotic data into your most valuable asset: actionable intelligence. At its core, an ETL pipeline is a three-step process: Extract, Transform, and Load. Think of it as a sophisticated, automated system for turning raw materials (data) into a refined, valuable product (insights).

The Journey of IoT Data: A Simple Breakdown of ETL

An ETL pipeline is a common backbone of modern data integration. It systematically extracts data from various sources, transforms it into a clean and usable format, and then loads it into a central system for analysis. Let’s walk through what this means in an IoT world.

Extract: Capturing Data from the Source

The first step is to collect raw data from a multitude of IoT devices. This isn’t just sensors on a machine; it can include everything from GPS trackers on a fleet of trucks to environmental sensors in a smart building. These devices often send their data streams over lightweight publish/subscribe protocols such as MQTT. The challenge here is handling the immense volume and variety of data arriving at high speed.
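A minimal extraction sketch, assuming devices publish JSON payloads to MQTT topics that follow a hypothetical `site/line/device/metric` naming convention. Receiving messages would normally be handled by an MQTT client library; here a message is just a `(topic, payload)` pair so the parsing logic can be shown on its own. The `RawReading` type and `extract` function are illustrative names.

```python
import json
from dataclasses import dataclass
from typing import Optional


@dataclass
class RawReading:
    """One extracted reading, not yet cleaned or validated."""
    site: str
    line: str
    device_id: str
    metric: str
    payload: dict


def extract(topic: str, payload: bytes) -> Optional[RawReading]:
    """Split a topic like 'plant1/line3/press42/temperature' and decode its JSON body.

    Returns None for messages that do not match the assumed topic layout or whose
    payload is not a UTF-8 JSON object, so one misbehaving device cannot halt ingestion.
    """
    parts = topic.split("/")
    if len(parts) != 4:
        return None
    try:
        body = json.loads(payload.decode("utf-8"))
    except (UnicodeDecodeError, json.JSONDecodeError):
        return None
    if not isinstance(body, dict):
        return None
    site, line, device_id, metric = parts
    return RawReading(site, line, device_id, metric, body)


reading = extract("plant1/line3/press42/temperature", b'{"ts": 1700000000, "value": 71.5}')
print(reading.device_id, reading.payload["value"])  # press42 71.5
```

Returning `None` instead of raising keeps the extractor simple; a production pipeline would more likely route rejected messages to a dead-letter topic for inspection.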

Transform: Cleaning, Structuring, and Enriching Your Data

This is where the real magic happens. Raw sensor data—often in complex binary formats or inconsistent structures—is refined. The transformation stage cleans, structures, and enriches the data, making it ready for analysis.
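To make "complex binary formats" concrete, here is a sketch that decodes a fixed-size binary frame with Python's `struct` module. The 16-byte layout, the 0.01 mm/s fixed-point scaling, and the meaning of flag bit 0 are all assumptions for illustration; a real device's datasheet defines its own frame format.

```python
import struct

# Hypothetical frame layout (little-endian, no padding), 16 bytes total:
#   uint32 device_id | uint32 unix_ts | float32 temp_c | uint16 vib_centi_mm_s | uint16 flags
FRAME = struct.Struct("<IIfHH")


def decode_frame(frame: bytes) -> dict:
    """Turn one binary sensor frame into a structured record."""
    if len(frame) != FRAME.size:
        raise ValueError(f"expected {FRAME.size} bytes, got {len(frame)}")
    device_id, ts, temp_c, vib_raw, flags = FRAME.unpack(frame)
    return {
        "device_id": device_id,
        "ts": ts,
        "temp_c": round(temp_c, 2),        # round away float32 representation noise
        "vibration_mm_s": vib_raw / 100,   # assumed fixed-point scaling of 0.01 mm/s
        "fault": bool(flags & 0x1),        # assumed: bit 0 signals a sensor self-test failure
    }


raw = FRAME.pack(42, 1_700_000_000, 71.5, 1234, 0)
print(decode_frame(raw))
```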

Key transformations in an IoT pipeline include:

- Cleaning: Filtering out irrelevant or inaccurate “noisy data” from faulty sensors.

- Structuring: Parsing semi-structured payloads (such as nested JSON) or binary frames into a standard, queryable schema.

- Aggregating: Summarizing data points over time windows (e.g., calculating the average temperature per minute instead of per second).

- Enriching: Combining the sensor data with other business data—for example, linking a machine’s vibration data with its maintenance history.
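The four transformations above can be sketched as small functions chained together. The plausibility range and the `LAST_SERVICE` lookup (standing in for a maintenance system) are hypothetical; aggregation uses 60-second tumbling windows keyed by device, matching the "average temperature per minute" example.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical plausibility range for a temperature sensor, in degrees C.
VALID_RANGE = (-40.0, 150.0)

# Stand-in for business data, e.g. rows pulled from a maintenance system.
LAST_SERVICE = {"press42": "2024-01-15"}


def clean(readings):
    """Drop readings that are missing a value or fall outside the plausible range."""
    lo, hi = VALID_RANGE
    return [r for r in readings if r.get("value") is not None and lo <= r["value"] <= hi]


def aggregate_per_minute(readings):
    """Average readings per (device, minute) using 60-second tumbling windows."""
    buckets = defaultdict(list)
    for r in readings:
        minute = r["ts"] - r["ts"] % 60  # start of the window containing ts
        buckets[(r["device_id"], minute)].append(r["value"])
    return [
        {"device_id": d, "minute": m, "avg_temp": mean(vals), "samples": len(vals)}
        for (d, m), vals in sorted(buckets.items())
    ]


def enrich(rows):
    """Attach the last maintenance date (None if unknown) to each aggregate."""
    return [{**row, "last_service": LAST_SERVICE.get(row["device_id"])} for row in rows]


readings = [
    {"device_id": "press42", "ts": 120, "value": 70.0},
    {"device_id": "press42", "ts": 150, "value": 72.0},
    {"device_id": "press42", "ts": 155, "value": 999.0},  # faulty spike, dropped by clean()
    {"device_id": "press42", "ts": 185, "value": None},   # missing value, dropped by clean()
]
for row in enrich(aggregate_per_minute(clean(readings))):
    print(row)
```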

Load: Delivering Insights for Decision-Making

Once transformed, the high-quality data is loaded into a destination system, such as a cloud data warehouse or a data lake. From there, it’s ready to be used by analytics dashboards, business intelligence tools, and machine learning models.
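A common pattern for the Load step is to buffer transformed rows and write them in batches rather than one at a time. This sketch uses an in-memory `sqlite3` database purely as a stand-in for a cloud warehouse (which would have its own bulk-load API); the `BatchLoader` class, table name, and batch size are illustrative assumptions.

```python
import sqlite3


class BatchLoader:
    """Buffer transformed rows and write them to the destination in batches."""

    def __init__(self, conn: sqlite3.Connection, batch_size: int = 500):
        self.conn = conn
        self.batch_size = batch_size
        self.buffer = []
        conn.execute(
            "CREATE TABLE IF NOT EXISTS temp_per_minute "
            "(device_id TEXT, minute INTEGER, avg_temp REAL)"
        )

    def add(self, row: dict) -> None:
        self.buffer.append((row["device_id"], row["minute"], row["avg_temp"]))
        if len(self.buffer) >= self.batch_size:
            self.flush()

    def flush(self) -> None:
        if not self.buffer:
            return
        with self.conn:  # one transaction per batch: commit on success, roll back on error
            self.conn.executemany("INSERT INTO temp_per_minute VALUES (?, ?, ?)", self.buffer)
        self.buffer.clear()


conn = sqlite3.connect(":memory:")
loader = BatchLoader(conn, batch_size=2)
for m in (0, 60, 120):
    loader.add({"device_id": "press42", "minute": m, "avg_temp": 70.0 + m / 60})
loader.flush()  # write the final partial batch
print(conn.execute("SELECT COUNT(*) FROM temp_per_minute").fetchone()[0])  # 3
```

Larger batches mean fewer round trips to the destination, at the cost of slightly higher end-to-end latency; the right size depends on the target system.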

Powering the Pipeline: Essential Tools of the Trade

Building a robust, real-time ETL pipeline requires tools designed to handle high-volume data streams. Two of the most widely used are:

- Apache Kafka: Think of Kafka as the central nervous system for your data. It is a distributed streaming platform designed to ingest and move massive amounts of data from thousands of devices in real time with very low latency. It acts as a durable, fault-tolerant buffer between your IoT devices and the processing engines.
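Two ideas explain why Kafka works well as a buffer: messages with the same key land in the same partition (so each device's readings stay in order), and consumers track their own offsets (so they can resume or replay after a failure). The toy class below models only those two ideas in memory; it is not Kafka's API, and it uses `zlib.crc32` for illustration where Kafka's default partitioner uses a murmur2 hash.

```python
import zlib


class PartitionedLog:
    """Toy, in-memory model of a Kafka-style topic: an append-only log split into partitions.

    Real Kafka persists and replicates partitions across brokers; this only models
    key-based partitioning, per-partition offsets, and replay from a stored offset.
    """

    def __init__(self, num_partitions: int = 3):
        self.partitions = [[] for _ in range(num_partitions)]

    def partition_for(self, key: str) -> int:
        # Same key -> same partition, so each device's readings stay in order.
        return zlib.crc32(key.encode()) % len(self.partitions)

    def append(self, key: str, value: dict) -> tuple:
        p = self.partition_for(key)
        self.partitions[p].append((key, value))
        return p, len(self.partitions[p]) - 1  # (partition, offset)

    def read(self, partition: int, from_offset: int):
        """Return every record at or after from_offset; consumers track their own offsets."""
        return self.partitions[partition][from_offset:]


log = PartitionedLog()
for i in range(3):
    p, off = log.append("press42", {"seq": i})
# A consumer that crashed after processing offset 0 resumes at offset 1 and replays the rest.
print([v["seq"] for _, v in log.read(p, 1)])  # [1, 2]
```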

- Apache Spark: If Kafka is the nervous system, Spark is the powerful brain. It is a high-speed, open-source framework for large-scale data processing. Spark can process both real-time data streams and historical batches, and its in-memory computing capabilities make it exceptionally fast for complex transformations and analytics.

Alternative Tools for Stream Processing and Orchestration

- Apache Flink: A unified engine for stream and batch processing, offering stateful, low-latency analytics.

- Amazon Kinesis (and other cloud-native services such as Google Cloud Pub/Sub and Dataflow): Fully managed streaming platforms with native cloud integration and scalability.

- Apache Beam: A flexible, unified pipeline model executable across multiple engines (e.g., Flink, Spark).

- Apache NiFi: A visual, flow-based data routing and ETL tool with built-in security, provenance, and Git-integrated versioning—particularly strong for IoT and hybrid environments.

- Apache Airflow: Python-based DAG orchestration to manage complex ETL workflows.
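Much of what stream processors such as Flink and Spark Structured Streaming offer is managed state for event-time windows: they decide when a window is complete, even if events arrive out of order. The class below is a conceptual sketch of that idea using a watermark (the highest event time seen, minus an allowed lateness). The window size and lateness values are assumptions, and real engines also checkpoint this state for fault tolerance.

```python
from collections import defaultdict


class TumblingWindowCounter:
    """Conceptual sketch of stateful, event-time windowing with a watermark."""

    def __init__(self, size_s: int = 60, allowed_lateness_s: int = 10):
        self.size = size_s
        self.lateness = allowed_lateness_s
        self.open_windows = defaultdict(int)  # window start -> event count
        self.max_event_ts = 0
        self.dropped_late = 0

    def on_event(self, event_ts: int) -> list:
        """Count one event; return (window_start, count) for windows that just closed."""
        self.max_event_ts = max(self.max_event_ts, event_ts)
        watermark = self.max_event_ts - self.lateness
        start = event_ts - event_ts % self.size
        if start + self.size <= watermark:
            self.dropped_late += 1  # its window has already closed
        else:
            self.open_windows[start] += 1
        closed = sorted(s for s in self.open_windows if s + self.size <= watermark)
        return [(s, self.open_windows.pop(s)) for s in closed]


w = TumblingWindowCounter(size_s=60, allowed_lateness_s=10)
out = []
for ts in (5, 30, 65, 20, 75, 40):  # 20 is out of order but on time; 40 arrives too late
    out += w.on_event(ts)
print(out, w.dropped_late)  # [(0, 3)] 1
```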

Building Real-Time IoT Pipelines: Best Practices at a Glance

Elevate your IoT pipeline architecture from functional to future-proof by integrating these foundational practices:

Version Everything & Collaborate Like Engineers

Treat your data pipelines as code. Whether it’s SQL transformations, schemas, or configuration files, everything should be versioned using tools like Git. This ensures:

- A single source of truth for all pipeline logic.

- Full traceability—track changes, revert when needed, and audit modifications.

- Structured collaboration—branches, pull requests, and peer reviews become part of the workflow.

Modular Design for Flexibility & Scale

Break your pipeline into smaller, independent components for Extract, Transform, Load, and orchestration:

- Fosters reuse, ease of testing, and simpler debugging.

- Avoids brittle monoliths that are hard to update or scale.

- Supports swappable components, whether using streaming frameworks, connectors, or orchestration tools.
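One lightweight way to get this modularity is to give every stage the same shape: a function that takes an iterable of records and returns an iterable of records. The sketch below is illustrative; `run_pipeline` and the example stages are hypothetical names, and the same idea maps onto classes, microservices, or operators in a streaming framework.

```python
from typing import Callable, Iterable, List

Record = dict
Stage = Callable[[Iterable[Record]], Iterable[Record]]


def run_pipeline(source: Iterable[Record], stages: List[Stage], sink: Callable[[Record], None]) -> int:
    """Chain independent stages; any stage can be swapped or tested on its own."""
    data = source
    for stage in stages:
        data = stage(data)
    count = 0
    for record in data:
        sink(record)
        count += 1
    return count


# Hypothetical stages: each takes an iterable of records and yields records.
def drop_nulls(records):
    return (r for r in records if r.get("value") is not None)


def to_fahrenheit(records):
    return ({**r, "value_f": r["value"] * 9 / 5 + 32} for r in records)


loaded = []
n = run_pipeline(
    source=[{"value": 20.0}, {"value": None}, {"value": 100.0}],
    stages=[drop_nulls, to_fahrenheit],
    sink=loaded.append,
)
print(n, [r["value_f"] for r in loaded])  # 2 [68.0, 212.0]
```

Because stages are generators, records stream through one at a time instead of being materialized between steps.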

Embed Observability & Monitoring

Visibility is non-negotiable in production pipelines. Build in:

- Comprehensive logging and tracing for all stages of data flow.

- Monitoring of key metrics (e.g., volume, latency, error rates).

- Alerts and dashboards to detect anomalies quickly.
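A minimal sketch of embedding these signals directly in a stage: a wrapper that counts processed and failed records, measures per-record latency, logs failures, and warns when the error rate crosses a threshold. The `observed` wrapper, `StageMetrics` class, and 5% threshold are hypothetical; in production these numbers would typically be exported to a monitoring system rather than only logged.

```python
import logging
import time
from dataclasses import dataclass, field

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(name)s %(message)s")
log = logging.getLogger("pipeline.transform")


@dataclass
class StageMetrics:
    processed: int = 0
    failed: int = 0
    latencies_ms: list = field(default_factory=list)

    @property
    def error_rate(self) -> float:
        total = self.processed + self.failed
        return self.failed / total if total else 0.0


def observed(fn, metrics: StageMetrics, error_rate_alert: float = 0.05):
    """Wrap a per-record function to record volume, errors, and latency."""
    def wrapper(record):
        start = time.perf_counter()
        try:
            result = fn(record)
            metrics.processed += 1
            return result
        except Exception:
            metrics.failed += 1
            log.exception("record failed: %r", record)
            if metrics.error_rate > error_rate_alert:
                log.warning("error rate %.1f%% above threshold", metrics.error_rate * 100)
            return None
        finally:
            metrics.latencies_ms.append((time.perf_counter() - start) * 1000)
    return wrapper


m = StageMetrics()
parse = observed(lambda r: float(r["value"]), m)
for rec in ({"value": "1.5"}, {"value": "oops"}, {"value": "2"}):
    parse(rec)
print(m.processed, m.failed, round(m.error_rate, 2))  # 2 1 0.33
```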

Adopt CI/CD for Data Pipelines

Automation isn’t just for apps—it’s for data too. Apply DevOps principles:

- Automate testing, validation, and deployment of pipelines via CI/CD.

- Maintain separate development, staging, and production environments so changes are vetted before going live.

- Use pull requests and automated tests to enforce quality and reliability.
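The automated tests a CI job runs on each pull request can be ordinary unit tests of transformation logic. The sketch below uses Python's built-in `unittest`; the transform under test is a hypothetical example, and a CI step might run such files with `python -m unittest discover`.

```python
import unittest


def celsius_to_fahrenheit(c):
    """Transform under test (hypothetical): rejects missing values instead of guessing."""
    if c is None:
        raise ValueError("missing reading")
    return c * 9 / 5 + 32


class TransformTests(unittest.TestCase):
    def test_known_points(self):
        self.assertEqual(celsius_to_fahrenheit(0), 32)
        self.assertEqual(celsius_to_fahrenheit(100), 212)

    def test_missing_value_rejected(self):
        with self.assertRaises(ValueError):
            celsius_to_fahrenheit(None)


if __name__ == "__main__":
    # exit=False lets the script continue after reporting results.
    unittest.main(argv=["transform-tests"], exit=False)
```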

Design for Fault Tolerance & Error Handling

IoT data sources are dynamic and unpredictable. Mitigate risks by:

- Implementing structured error logging, retries, and fallback mechanisms to maintain resilience.

- Embedding data quality checks and anomaly detection early in the pipeline.
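Retries and fallbacks can be sketched as follows: transient failures (for example, a timeout writing downstream) are retried with exponential backoff and jitter, while records that keep failing, or fail for a non-retryable reason such as malformed data, go to a dead-letter list instead of blocking the stream. `TransientError`, `process_with_retry`, and the delay values are hypothetical; the `sleep` parameter is injectable so the logic can be tested without waiting.

```python
import random
import time


class TransientError(Exception):
    """Hypothetical error type for failures worth retrying (e.g. a timeout)."""


def process_with_retry(record, handler, dead_letters, max_attempts=4, base_delay_s=0.1, sleep=time.sleep):
    """Retry transient failures with exponential backoff; dead-letter everything else."""
    for attempt in range(1, max_attempts + 1):
        try:
            return handler(record)
        except TransientError as exc:
            if attempt == max_attempts:
                dead_letters.append({"record": record, "error": repr(exc), "attempts": attempt})
                return None
            # 0.1 s, 0.2 s, 0.4 s, ... plus up to 10% jitter so retries do not synchronize
            delay = base_delay_s * 2 ** (attempt - 1)
            sleep(delay + random.uniform(0, delay * 0.1))
        except Exception as exc:  # bad data will not fix itself: dead-letter immediately
            dead_letters.append({"record": record, "error": repr(exc), "attempts": attempt})
            return None


calls = {"n": 0}


def flaky(rec):
    calls["n"] += 1
    if calls["n"] < 3:
        raise TransientError("timeout")
    return rec["value"] * 2


dlq = []
print(process_with_retry({"value": 21}, flaky, dlq, sleep=lambda s: None))  # 42
print(process_with_retry({"value": "x"}, lambda r: int(r["value"]), dlq))  # None
print(len(dlq))  # 1
```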

Plan for Scalability & Adaptability

IoT environments evolve—so should your pipeline architecture:

- Leverage parallel processing, microservices, or cloud-native frameworks for scalability.

- Make pipelines idempotent so repeat runs don’t create duplicate data.

- Ensure adaptability to evolving schemas and sources.
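Idempotency and schema tolerance can both live in the load step. This sketch upserts on a natural key of `(device_id, ts)`, so replaying a batch after a crash overwrites rather than duplicates rows, and it tolerates schema drift by ignoring unknown fields and defaulting a newer optional field. It uses `sqlite3` as a stand-in destination and needs SQLite 3.24+ for `ON CONFLICT ... DO UPDATE`; the key choice and the default unit `'C'` are assumptions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    """CREATE TABLE readings (
           device_id TEXT NOT NULL,
           ts        INTEGER NOT NULL,
           value     REAL,
           unit      TEXT,
           PRIMARY KEY (device_id, ts)  -- natural key: one row per device per timestamp
       )"""
)


def load(records):
    """Upsert keyed on (device_id, ts); unknown fields are ignored, missing 'unit' defaults to 'C'."""
    rows = [(r["device_id"], r["ts"], r.get("value"), r.get("unit", "C")) for r in records]
    with conn:
        conn.executemany(
            """INSERT INTO readings (device_id, ts, value, unit) VALUES (?, ?, ?, ?)
               ON CONFLICT (device_id, ts) DO UPDATE SET value = excluded.value, unit = excluded.unit""",
            rows,
        )


batch = [
    {"device_id": "press42", "ts": 100, "value": 70.1},                           # older schema
    {"device_id": "press42", "ts": 160, "value": 70.4, "unit": "C", "fw": "2.1"},  # extra field
]
load(batch)
load(batch)  # e.g. a retry after a crash: same batch, same result
print(conn.execute("SELECT COUNT(*) FROM readings").fetchone()[0])  # 2
```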

Document Everything for Trust and Continuity

Complete documentation builds confidence and accelerates onboarding:

- Maintain a style guide for code clarity and consistency.

- Embed metadata and data lineage into your pipelines for transparency.

- Leverage automated documentation tools to make workflows discoverable.

Beyond ETL: A Look at Modern ELT

While ETL is the foundational model, the rise of powerful cloud computing has given birth to its agile counterpart: ELT (Extract, Load, Transform). The difference is simple but profound: with ELT, raw data is loaded directly into a powerful cloud data warehouse before it is transformed.

This “load first, transform later” approach leverages the immense processing power of modern cloud platforms. For IoT and AI, this is a game-changer. By loading the complete, unfiltered dataset first, data scientists gain maximum flexibility to explore raw data and build more sophisticated AI models. ELT is well suited to massive volumes of semi-structured or unstructured data where the final use case might not be known in advance.
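The difference is easiest to see side by side with the Transform step described earlier. In this ELT sketch, raw readings (nulls and outliers included) are loaded untouched, and the cleaning and per-minute averaging happen afterwards in SQL inside the destination. `sqlite3` stands in for a cloud warehouse, whose SQL dialect and table names would differ; the range filter is the same assumed plausibility check.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Extract + Load: land the raw readings as-is (values kept as text, bad rows included).
conn.execute("CREATE TABLE raw_readings (device_id TEXT, ts INTEGER, value TEXT)")
conn.executemany(
    "INSERT INTO raw_readings VALUES (?, ?, ?)",
    [("press42", 120, "70.0"), ("press42", 150, "72.0"), ("press42", 155, "999"), ("press42", 185, None)],
)

# Transform, later and inside the destination: SQL derives a clean, aggregated table.
# The raw table stays available for questions nobody has asked yet.
conn.execute(
    """CREATE TABLE temp_per_minute AS
       SELECT device_id,
              ts - ts % 60             AS minute,
              AVG(CAST(value AS REAL)) AS avg_temp,
              COUNT(*)                 AS samples
       FROM raw_readings
       WHERE value IS NOT NULL AND CAST(value AS REAL) BETWEEN -40 AND 150
       GROUP BY device_id, minute"""
)
print(conn.execute("SELECT * FROM temp_per_minute").fetchall())  # [('press42', 120, 71.0, 2)]
```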

The choice between ETL and ELT depends entirely on your business needs, infrastructure, and data strategy. But understanding both is key to building a future-proof system.

When ETL Makes Sense

In some situations, ETL (Extract, Transform, Load) remains a pragmatic and even superior choice over ELT (Extract, Load, Transform). While ELT is favored in modern, cloud-native environments for its flexibility with large and varied data, ETL offers specific advantages for certain use cases, infrastructures, and industries.

Strict data governance and compliance

In heavily regulated industries like healthcare and finance, sensitive data is subject to strict privacy regulations such as HIPAA and GDPR. ETL allows sensitive data to be masked, anonymized, or removed during the transformation phase, before it is loaded into the destination system. This prevents raw, sensitive data from ever being stored in the data warehouse, which can help organizations maintain strict compliance.
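A minimal sketch of protecting sensitive fields in the Transform step, before Load: some fields are dropped outright, and identifiers are replaced with a keyed HMAC so records for the same person remain joinable without exposing the raw ID. The field lists and key handling are assumptions (the key would normally come from a secrets manager). Note that under GDPR, pseudonymized data generally still counts as personal data, so this reduces exposure rather than removing obligations.

```python
import hashlib
import hmac

# Assumption: in practice this key is fetched from a secrets manager, never hard-coded.
PSEUDONYM_KEY = b"replace-with-a-managed-secret"

SENSITIVE_DROP = {"patient_name", "address"}   # removed entirely
SENSITIVE_PSEUDONYMIZE = {"patient_id"}        # replaced by a keyed hash


def protect(record: dict) -> dict:
    """Drop or pseudonymize sensitive fields so raw identifiers never reach the destination."""
    out = {}
    for k, v in record.items():
        if k in SENSITIVE_DROP:
            continue
        if k in SENSITIVE_PSEUDONYMIZE:
            # Keyed HMAC-SHA256: stable for the same input, hard to reverse by guessing IDs.
            v = hmac.new(PSEUDONYM_KEY, str(v).encode(), hashlib.sha256).hexdigest()
        out[k] = v
    return out


print(protect({"patient_id": "P-1001", "patient_name": "Jane Doe", "heart_rate": 72}))
```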

Upfront data cleansing and validation

If a company’s data sources are known to contain messy, low-quality, or inconsistent data, ETL’s upfront transformation is beneficial. The data can be cleansed, standardized, and validated in a staging area before it is loaded. This ensures a “single source of truth” with high data quality, which is crucial for predictable business intelligence (BI) reporting and decision-making.
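Validation in a staging area can be as simple as a list of named rules applied to every record, with failing records set aside along with the reasons they failed. The rules below are hypothetical examples; keeping the failure reasons makes rejected data much easier to trace back to its source.

```python
# Hypothetical validation rules for a staging area: (rule name, check) pairs.
RULES = [
    ("device_id present", lambda r: bool(r.get("device_id"))),
    ("ts is an int", lambda r: isinstance(r.get("ts"), int)),
    ("value is numeric", lambda r: isinstance(r.get("value"), (int, float))),
    ("value in range", lambda r: isinstance(r.get("value"), (int, float)) and -40 <= r["value"] <= 150),
]


def validate(staged):
    """Split staged records into loadable rows and rejects annotated with failed rules."""
    accepted, rejected = [], []
    for record in staged:
        failures = [name for name, check in RULES if not check(record)]
        if failures:
            rejected.append({"record": record, "failed": failures})
        else:
            accepted.append(record)
    return accepted, rejected


ok, bad = validate([
    {"device_id": "press42", "ts": 100, "value": 70.2},
    {"device_id": "", "ts": "100", "value": 500},
])
print(len(ok), bad[0]["failed"])
```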

Well-defined and stable data models

For organizations with stable, structured data that fits a predictable schema, such as data from ERP or traditional transactional systems, ETL is a reliable choice. The upfront work of defining the transformations is largely a one-time effort. This approach is more rigid but produces consistent, high-quality data that is always ready for analysis without repeated, ad-hoc transformations.

Legacy or on-premise systems

Companies with significant investments in legacy or on-premise hardware often rely on ETL. Because these systems were not designed for large-scale, in-warehouse transformations, performing the transformation step on a dedicated server can be a more efficient use of resources.

Minimize transformation costs in high-cost environments

While ELT is often seen as more cost-effective due to its use of flexible cloud computing, this is not always true. In certain cloud data warehouses, running complex or frequent in-warehouse transformations can become expensive. ETL allows transformations to happen on a separate, potentially cheaper, processing engine, which can lead to lower overall compute costs, especially when dealing with moderate, predictable data volumes.

Batch processing where real-time analysis is not required

Many business intelligence and reporting tasks do not require real-time data. For these use cases, batch ETL jobs can be scheduled during off-peak hours to aggregate sales data, populate reports, or conduct other historical analyses. In this context, ETL trades latency for high throughput: the full dataset is processed efficiently in a structured batch, with an emphasis on data completeness over speed.

Beyond the Pipeline: The AI-Powered Advantage

Whether you choose ETL or ELT, the ultimate purpose of a data pipeline is to prepare the clean, steady stream of data needed to fuel the real engine of business transformation: Artificial Intelligence (AI) and Machine Learning (ML).

This is where data becomes predictive. By feeding the high-quality, real-time data from your pipeline into AI models, you can:

- Enable Predictive Maintenance: AI algorithms can analyze sensor data to predict equipment failures before they happen, reducing costly unplanned downtime and repairs.

- Automate Anomaly Detection: Instantly identify unusual patterns that could indicate a quality control issue, a security breach, or a safety concern.

- Optimize Complex Operations: Use real-time data to optimize logistics, manage energy consumption across smart facilities, or improve manufacturing yields.
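As a simple illustration of anomaly detection on pipeline output, the sketch below flags a reading whose z-score against a rolling window of recent readings exceeds a threshold. This is a deliberately basic statistical baseline rather than a trained ML model; the window size, threshold, and minimum baseline of five readings are assumptions that would be tuned per sensor.

```python
from collections import deque
from statistics import mean, pstdev


class RollingZScoreDetector:
    """Flag a reading whose z-score against the last `window` readings exceeds `threshold`."""

    def __init__(self, window: int = 30, threshold: float = 3.0):
        self.history = deque(maxlen=window)
        self.threshold = threshold

    def update(self, value: float) -> bool:
        is_anomaly = False
        if len(self.history) >= 5:  # need a minimal baseline before judging
            mu, sigma = mean(self.history), pstdev(self.history)
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                is_anomaly = True
        if not is_anomaly:
            self.history.append(value)  # keep anomalies out of the baseline
        return is_anomaly


det = RollingZScoreDetector(window=10, threshold=3.0)
stream = [20.1, 20.3, 19.9, 20.0, 20.2, 20.1, 35.0, 20.0]
flags = [det.update(v) for v in stream]
print(flags)  # [False, False, False, False, False, False, True, False]
```

Excluding anomalies from the baseline keeps a single spike from masking the next one, but it also means a genuine, lasting level shift would keep being flagged until the baseline is reset; which behavior is preferable depends on the sensor.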

The fusion of AI with IoT data analytics is what transforms a business from reactive to proactive, turning your IoT infrastructure into a source of continuous innovation and competitive advantage.

Your Data Is an Asset—Let’s Unlock Its Value

A well-architected data pipeline is the essential, non-negotiable backbone of any successful IoT initiative. It’s the engine that converts the raw noise from connected devices into the clear, structured data needed for intelligent action.

But building the pipeline is just the first step. The true transformation comes from what you do with that data. As a specialized IoT development company, we don’t just build the data highways—we design the intelligent systems that use that data to drive real-world outcomes.

Ready to turn your IoT data into a strategic asset? Contact our experts today to learn how our AI-powered solutions can bring your data to life. And stay tuned for our next article, where we’ll take a deeper dive into the world of ELT and its impact on modern analytics!
