Table of Contents

- Add Machine Learning to IoT Edge Devices Using TensorFlow Lite Framework

- TensorFlow Lite for Microcontrollers

- Benefits of Using TensorFlow Lite for IoT Edge Devices

- Machine Learning Models Features (Examples) with TensorFlow Lite

- Converting TensorFlow Models to TensorFlow Lite

- Hardware Platforms (IDE) for ML in IoT Edge

- TensorFlow Lite Supported Devices

- TensorFlow Consulting

Add Machine Learning to IoT Edge Devices Using TensorFlow Lite Framework

Businesses looking to create intelligent solutions are adding machine learning capabilities to appliances, toys, smart sensors, and other IoT devices. These low-powered devices have ML embedded in them to solve new problems. Product developers create new features by defining a specific business problem and working out how a prediction provides a solution. These solutions work by feeding a large amount of data into an algorithm, which is trained to build a model (a program) that makes predictions about new situations (inference).

Machine learning in IoT systems enables the development of new applications in smaller devices with limited memory and computational power (resource constrained) at the Edge (IoT Edge).

You can get started by deploying pretrained machine learning models on Edge devices with embedded microcontrollers using TensorFlow Lite, an open-source set of tools and libraries. Models are typically converted with a Python API and then executed on the device by the TensorFlow Lite runtime. Mobile apps and Edge devices can be enhanced with machine learning capabilities in many domains using models for object detection, image classification, pose estimation, and video and audio classification.

TensorFlow Lite for Microcontrollers

A machine learning model can be packaged into an app and deployed on a device such as a Raspberry Pi at the Edge. TensorFlow developers can integrate an existing machine learning model into your mobile app using the TensorFlow Lite libraries and APIs. These models can also be customized for a particular input dataset with a few lines of code.
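As a rough sketch of what that integration can look like on an embedded Linux board, the snippet below loads a `.tflite` file with the TensorFlow Lite `Interpreter` from Python and ranks the output scores. The helper names `run_inference` and `top_k`, and the assumption that the model has a single input and a single output tensor, are illustrative choices rather than anything this article specifies.

```python
from typing import List, Sequence, Tuple


def run_inference(model_path: str, input_values):
    """Run one inference with a .tflite model (assumes one input, one output)."""
    # Imported lazily so this module still loads where these packages are absent.
    import numpy as np
    import tensorflow as tf

    interpreter = tf.lite.Interpreter(model_path=model_path)
    interpreter.allocate_tensors()

    input_info = interpreter.get_input_details()[0]
    output_info = interpreter.get_output_details()[0]

    # Cast and reshape the input to the dtype and shape the model expects.
    data = np.asarray(input_values, dtype=input_info["dtype"])
    data = data.reshape(input_info["shape"])

    interpreter.set_tensor(input_info["index"], data)
    interpreter.invoke()
    return interpreter.get_tensor(output_info["index"])


def top_k(scores: Sequence[float], k: int = 3) -> List[Tuple[int, float]]:
    """Return the k highest (class_index, score) pairs, best first."""
    ranked = sorted(enumerate(scores), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]
```

For a classifier whose output has shape `(1, num_classes)`, the first row of the array returned by `run_inference` can be passed to `top_k` to obtain the most likely classes. On small boards, a lighter runtime-only package is sometimes used in place of the full TensorFlow package; that substitution is not shown here.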

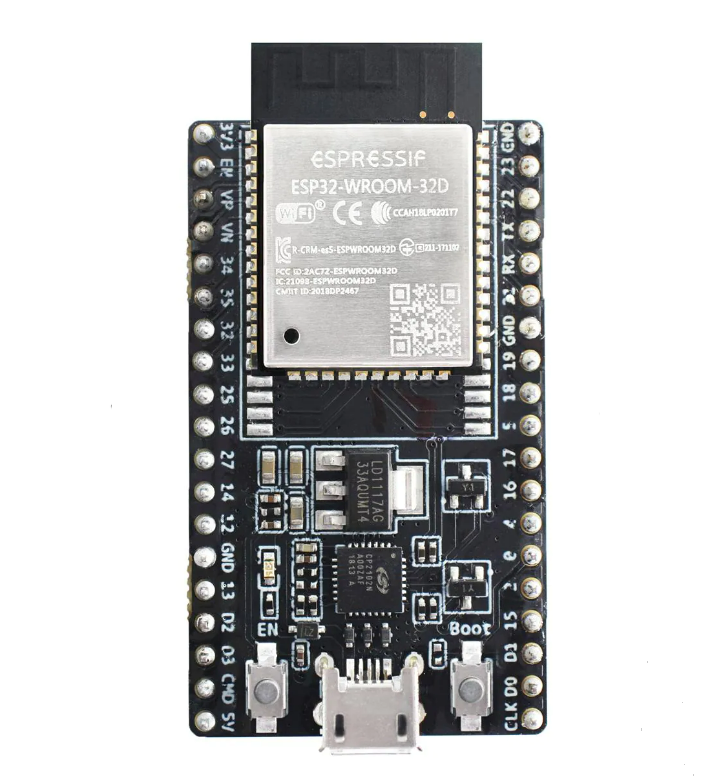

Other IoT use cases focus on products built around embedded microcontrollers, which run TensorFlow Lite with a limited set of operations and small model sizes to perform ML computations. Typical targets are Arm-based processors and the ESP32 SoC series. Converting and adapting an ML model to your use case may require retraining, fine-tuning, and evaluating the model until its predictions are accurate.

Developing IoT products that don’t require a wired power supply means building low-cost, low-power products that run machine learning software and act on data locally. Machine learning engineers build these solutions for clients around the target device and weigh model optimizations throughout the app development process. They use quantization techniques to balance model size and latency against accuracy.
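The trade-off that quantization makes can be illustrated with a small, self-contained sketch. It assumes the common 8-bit affine scheme, in which a real value is approximated as `scale * (q - zero_point)`; the function names and sample weights below are illustrative and are not part of the TensorFlow Lite API.

```python
from typing import List, Sequence, Tuple


def quantization_params(values: Sequence[float], num_bits: int = 8) -> Tuple[float, int]:
    """Compute (scale, zero_point) mapping the value range onto signed integers."""
    qmin, qmax = -(2 ** (num_bits - 1)), 2 ** (num_bits - 1) - 1
    # Extend the range to include 0.0 so that zero is represented exactly.
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    if hi == lo:
        return 1.0, 0
    scale = (hi - lo) / (qmax - qmin)
    zero_point = int(round(qmin - lo / scale))
    return scale, zero_point


def quantize(values: Sequence[float], scale: float, zero_point: int,
             num_bits: int = 8) -> List[int]:
    """Round each float to the nearest integer step and clamp to the int range."""
    qmin, qmax = -(2 ** (num_bits - 1)), 2 ** (num_bits - 1) - 1
    return [max(qmin, min(qmax, int(round(v / scale)) + zero_point)) for v in values]


def dequantize(quantized: Sequence[int], scale: float, zero_point: int) -> List[float]:
    """Recover approximate floats: real = scale * (q - zero_point)."""
    return [scale * (q - zero_point) for q in quantized]


# Hypothetical weights, chosen only to illustrate the round trip.
weights = [-0.62, -0.1, 0.0, 0.33, 0.91]
scale, zero_point = quantization_params(weights)
q_weights = quantize(weights, scale, zero_point)
restored = dequantize(q_weights, scale, zero_point)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q_weights, max_error)
```

Each weight is stored in one byte instead of the four bytes of a 32-bit float. The price is a rounding error which, for values inside the calibrated range, stays within about half of `scale`.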

At Krasamo, we have worked with embedded software development and have developed machine learning software for more than a decade, giving us the expertise to support new projects for partners.

Benefits of Using TensorFlow Lite for IoT Edge Devices

- Free and open-source with available resources (TensorFlow repositories)

- Optimized for on-device constraints: low latency, no required connectivity, data privacy, and low power consumption

- Supports iOS, Android, Embedded Linux, and Microcontrollers

- Supports Swift, Objective-C, Java, C++, and Python

- Integrates with Apple Core ML and Android Neural Networks API (NNAPI)

- Hardware accelerators and custom delegates for GPU, DSP, and TPU

- Model Optimization for Edge Devices

Machine Learning Models Features (Examples) with TensorFlow Lite

You can add machine learning capabilities to IoT products using TensorFlow Lite pretrained (off-the-shelf) models, which you can use right away and then extend or customize for your domain as your app evolves. You can build a model with the TensorFlow Lite core libraries and APIs to deploy in your mobile app, and use TF Lite Model Maker to customize models. TensorFlow provides several models, and you must experiment to find the best one for your use case.

TensorFlow developers design a model architecture according to the type of problem, data accessibility and its transformation process, and the device constraints.

Converting TensorFlow Models to TensorFlow Lite

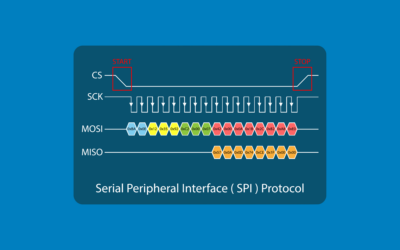

TensorFlow models are converted to fit in microcontrollers using the TensorFlow Lite converter Python API. The converter serializes the model as a FlatBuffer (a compact data format that reduces space) and expresses it with TensorFlow Lite operations.

The converted FlatBuffer model can be read without a separate parsing/unpacking step, which reduces the model size and speeds up inference on IoT devices. TensorFlow developers check whether the model's operations are supported by the runtime environment. There are several ways to approach the conversion, with different input model formats and additional steps that determine whether the model will work on Edge devices.
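A minimal sketch of one such conversion path follows, assuming the input is a trained `tf.keras` model. The function names `convert_keras_model` and `save_model` and the optional `representative_data` argument are hypothetical names introduced here; the converter calls themselves come from the `tf.lite.TFLiteConverter` Python API.

```python
from typing import Callable, Iterable, Optional


def convert_keras_model(
    model,
    representative_data: Optional[Callable[[], Iterable]] = None,
) -> bytes:
    """Convert a trained tf.keras model into TensorFlow Lite FlatBuffer bytes."""
    # Imported lazily so this module still loads where TensorFlow is absent.
    import tensorflow as tf

    converter = tf.lite.TFLiteConverter.from_keras_model(model)
    # Default optimizations enable post-training quantization of the weights.
    converter.optimizations = [tf.lite.Optimize.DEFAULT]

    if representative_data is not None:
        # With sample inputs, the converter can calibrate activations and
        # restrict the model to int8 built-in operations.
        converter.representative_dataset = representative_data
        converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS_INT8]
        converter.inference_input_type = tf.int8
        converter.inference_output_type = tf.int8

    # Conversion fails at this point if the model uses operations that the
    # selected operation set does not support.
    return converter.convert()


def save_model(tflite_bytes: bytes, path: str) -> int:
    """Write the converted model to disk and return the number of bytes written."""
    with open(path, "wb") as handle:
        return handle.write(tflite_bytes)
```

Calling `convert_keras_model(model)` on a trained model returns bytes that `save_model` can write to a `.tflite` file. Passing a generator function that yields sample inputs as `representative_data` requests full integer quantization, which is often what microcontroller targets need.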

Not all TensorFlow operations are supported by TensorFlow Lite, but there are conversion options to work around compatibility gaps, such as modifying the model or building a customized runtime environment. You can build a TensorFlow Lite model and an embedded application that analyzes device data in real time by starting from one of the following model types and training it on a dataset.

- Speech Recognition: classify short audio clips to recognize spoken words

- Image Classification: identify what an image represents (image classes)

- Object Detection: identify the position of objects inside a video or image

- Pose Estimation: a computer vision technique that estimates a person's pose from the location of body joints in a video or image

- Image Segmentation: identify which parts of an image belong to the target objects

- Audio Classification: find patterns and extract information from audio using a spectrogram

- Video Classification: predict the probabilities of classes or actions in a video (action recognition, etc.)

- Optical Character Recognition: extract text from images

- Person Detection: detect whether a person is present using camera sensors

- Gesture Detection: recognize gestures using a motion sensor

After the model is trained, evaluated, and converted to the TensorFlow Lite format, it should be optimized and quantized. The model is then tested to check its predictions by making comparisons, analyzing charts, and using other methods. Finally, the model is converted into a C source file (a byte array) and built into the embedded application. A TensorFlow Lite for Microcontrollers program is tested in a local environment before it is deployed to microcontrollers (Edge devices).
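One way to picture the final step is the following sketch, which renders the bytes of a converted model as a C array definition, similar in spirit to what `xxd -i` produces. The array name `g_model` and the placeholder bytes are assumptions for illustration; a real project would read the bytes from its own `.tflite` file.

```python
def to_c_array(data: bytes, name: str = "g_model", per_line: int = 12) -> str:
    """Render raw bytes as a C source snippet defining a const byte array."""
    rows = []
    for start in range(0, len(data), per_line):
        chunk = data[start:start + per_line]
        rows.append("  " + ", ".join(f"0x{byte:02x}" for byte in chunk) + ",")
    body = "\n".join(rows)
    return (
        f"const unsigned char {name}[] = {{\n{body}\n}};\n"
        f"const unsigned int {name}_len = {len(data)};\n"
    )


# Placeholder bytes standing in for a converted model (not a real model).
placeholder_model = bytes([0x1C, 0x00, 0x00, 0x00, 0x54, 0x46, 0x4C, 0x33])
c_source = to_c_array(placeholder_model)
print(c_source)
```

The generated text can be saved as a source file and compiled into the firmware, so the model lives in the program image and no file system is needed on the device. Some targets also expect the array to be aligned in memory, which this sketch does not handle.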

Hardware Platforms (IDE) for ML in IoT Edge

TensorFlow developers understand the different capabilities, tools, and APIs of microcontroller platforms and how to customize implementations for running machine learning on Edge devices. The following are the main platforms for embedded development:

- Arduino IDE

- Mbed

- ESP-IDF

TensorFlow Lite Supported Devices

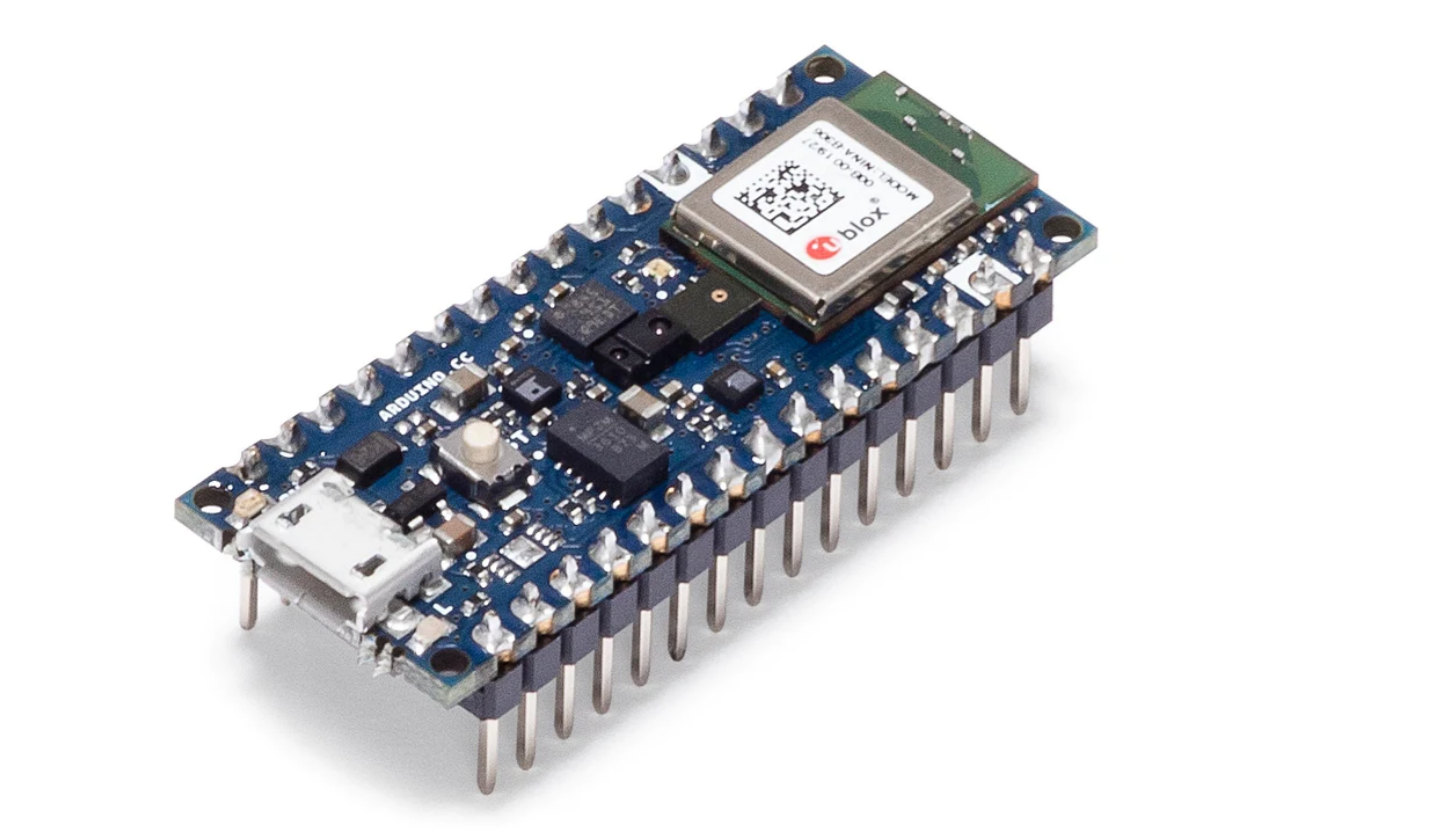

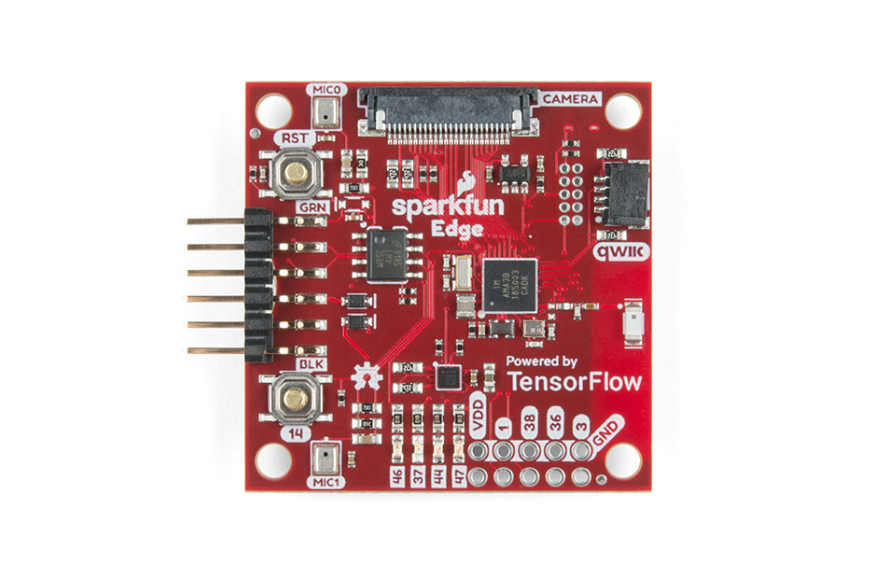

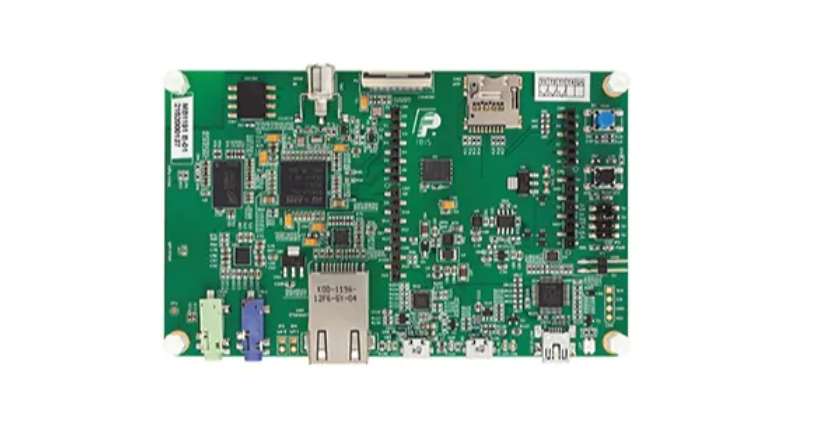

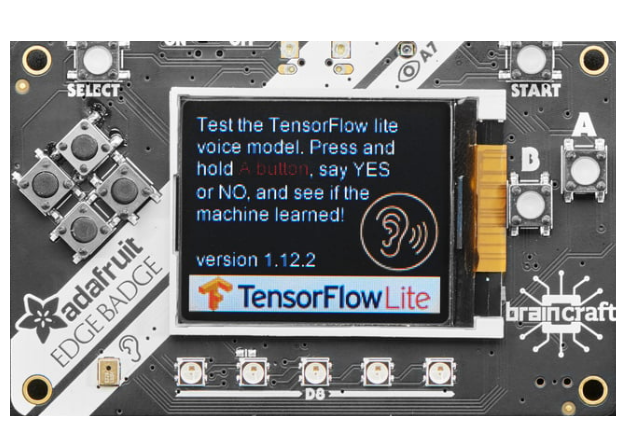

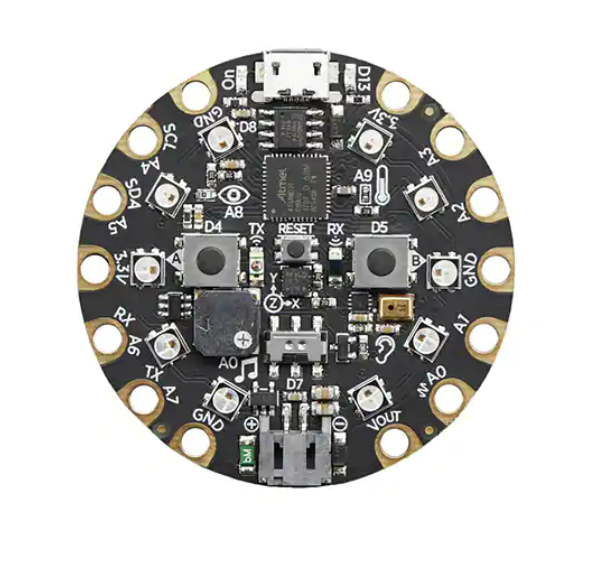

The following devices have been tested with TensorFlow Lite and have components (microphone, camera, sensors), capabilities, and special functions that can be adapted to several machine learning use cases in IoT.

TensorFlow Consulting

Krasamo is a Texas-based IoT development company with expertise in machine learning that can provide TensorFlow developers for your project.

IoT development teams are creating cutting-edge IoT devices with machine learning capabilities. Talk to our IoT and machine learning engineers to discuss your next design or prototype build.
