In the last decade, traditional businesses have been digitally transforming at an unprecedented pace. This era of digital disruption has been marked by the emergence of innovators who completely reshaped their respective industries.

For businesses that have learned to manage and operate with data efficiently (DataOps), implementing generative AI is the natural next step in their competitive journey. These technologies promise new and more efficient products, better user experiences, and operational efficiency improvements, all of which help sustain a competitive advantage.

However, LLMs face inherent limitations due to their reliance on static training data. This data caps their knowledge at a certain point and makes adapting to new, domain-specific information challenging. Retrieval-augmented generation (RAG) emerges as a key innovation, enabling LLMs to dynamically extend their knowledge base by referencing up-to-date, authoritative external data sources.

Companies adopting AI are developing solutions incorporating large language models (LLMs) and generative AI applications enhanced by Retrieval-Augmented Generation (RAG) capabilities. Knowledge-intensive tasks require external data retrieval and prompts to extend the functionality of LLMs. By augmenting an LLM with domain-specific business data, organizations can craft AI applications that are both agile and adaptable to their environment.

Creating a tailored generative AI solution involves customizing or modifying existing task-specific models to align with your business objectives. To get started, our AI engineers at Krasamo can customize AI agents, AI chatbots, AI enterprise search solutions, or other applications that combine data retrieval capabilities with a large language model (LLM). A general-purpose LLM, enhanced with APIs for chaining domain-specific data, offers a cost-effective approach to building generative AI applications.

On this page, we explore concepts related to retrieval-augmented generation (RAG) and the knowledge sources that support it, paving the way for deeper discussions on GenAI development with our business partners.

What Is Retrieval-Augmented Generation?

Retrieval-Augmented Generation is a method for enhancing Large Language Models (LLMs) by using external data sources to increase the model’s reliability and accuracy.

Because LLMs typically rely on knowledge encoded in their parameters (without ongoing access to external sources), their functionality is limited: they cannot easily revise or expand their knowledge, nor can they provide insight into their predictions. Their parameters represent the patterns of human language use, a form of implicit knowledge, so while they respond quickly to general prompts, they often lack domain- or topic-specific information and may produce incorrect answers or hallucinations.

Developing Retrieval-Augmented Generation (RAG) functionality enriches an application by connecting the LLM with specific external knowledge at query time, without changing the model’s weights. This helps overcome these limitations and deliver more accurate, context-aware responses.

How Does Retrieval-Augmented Generation Work?

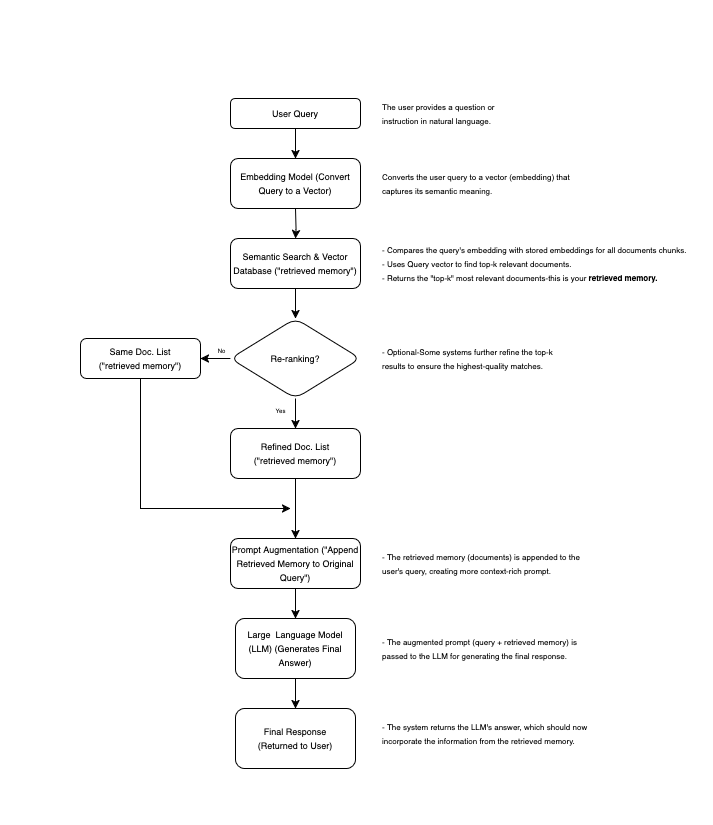

RAG follows a three-step cycle:

- Retrieve: Find the most relevant information (or “memories”) from external data sources

- Augment: Inject or append those relevant memories into the prompt

- Generate: Use the augmented prompt to produce the final answer with the LLM
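The three steps can be sketched end to end in a few lines of Python. This is only an illustration under stated assumptions: word overlap stands in for embedding-based retrieval, and `fake_llm` is a hypothetical placeholder for a call to a real model.

```python
import re


def tokenize(text):
    """Lowercase the text and return its set of words."""
    return set(re.findall(r"\w+", text.lower()))


def retrieve(query, documents, k=2):
    """Retrieve: rank documents by the number of words shared with the query.

    Word overlap is only a stand-in for embedding similarity.
    """
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(doc)), doc) for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:k] if score > 0]


def augment(query, memories):
    """Augment: place the retrieved memories in front of the question."""
    context = "\n".join(f"- {memory}" for memory in memories)
    return f"Relevant Context:\n{context}\n\nQuestion: {query}"


def fake_llm(prompt):
    """Generate: hypothetical placeholder for a call to a real LLM."""
    return f"[answer generated from a {len(prompt)}-character prompt]"


def rag_answer(query, documents):
    """Run the full retrieve, augment, generate cycle."""
    memories = retrieve(query, documents)
    return fake_llm(augment(query, memories))
```

In a real application, `retrieve` would query a vector database and `fake_llm` would be replaced by the model provider's client.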

A Krasamo developer can easily connect an LLM to your company datasets (enterprise knowledge base) to build a generative AI application that provides accurate responses for your business use case. This approach offers a simpler alternative to retraining a model. Below is a general overview of the RAG process.

RAG Inference Process

1. Data Ingestion

The process begins with importing documents from various external sources, such as databases, repositories, or APIs. These documents could be in PDF format or other forms and may contain knowledge that wasn’t available during the foundational model’s initial training.

2. Chunking and Document Splitting

When dealing with large or lengthy documents (e.g., entire PDFs or books), it’s common to split them into smaller, more manageable “chunks.” Chunking helps ensure more precise retrieval and keeps each piece within the embedding model’s token limit. By working with short “memories,” the system can more accurately locate relevant information.
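A minimal sketch of fixed-size chunking is shown below. It makes two assumptions that are not part of the description above: words are used as a rough proxy for tokens, and consecutive chunks overlap by a few words so that a sentence cut at a boundary still appears whole in one chunk. The function name and default sizes are illustrative.

```python
def chunk_text(text, chunk_size=50, overlap=10):
    """Split text into chunks of up to chunk_size words.

    Consecutive chunks share `overlap` words. Words are a rough
    proxy for tokens (assumption).
    """
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        # Stop once a chunk has reached the end of the text.
        if start + chunk_size >= len(words):
            break
    return chunks
```

Production systems often split on sentence or section boundaries instead, which tends to keep each chunk semantically coherent.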

3. Document and Query Conversion

The imported documents (PDFs, files, long-form texts) and any user queries must be converted into a format that allows relevancy searches through embedding models.

4. Embedding Process

The core of RAG’s functionality lies in transforming textual data into numerical representations through embeddings. Embedding is a critical step that converts both the document collection (knowledge library) and user-submitted queries into vectors, enabling the machine to understand and process textual information.

This numerical representation of the text is a vectorized form of information. For example, the sentences “when is your birthday” and “on which date you were born” will have very similar (or nearly identical) embeddings. (You can use existing task-specific models to provide embeddings for prompts and documents.)
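Similarity between embeddings is commonly measured with cosine similarity. The sketch below uses made-up three-dimensional vectors purely for illustration; a real embedding model would produce vectors with hundreds or thousands of dimensions, and the values here are assumptions, not model output.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# Hypothetical embeddings, invented for this example.
birthday_question = [0.90, 0.10, 0.30]  # "when is your birthday"
born_question = [0.85, 0.15, 0.35]      # "on which date you were born"
weather_question = [0.10, 0.90, 0.20]   # an unrelated question

similar = cosine_similarity(birthday_question, born_question)
unrelated = cosine_similarity(birthday_question, weather_question)
```

With these values, `similar` is close to 1 and `unrelated` is much lower, which is the behavior a good embedding model aims for with paraphrases versus unrelated text.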

Choosing the Right Embedding Model

- If your application deals with highly specialized vocabulary (e.g., medical or legal), consider a domain-specific embedding model.

- For multilingual use cases, a multilingual embedding model may be preferable.

- Larger models can yield more nuanced representations but come with higher computational costs. Balance performance with resource requirements.

5. Relevance Search and Augmentation

The RAG system compares the embedding of the user query with those in the knowledge library to find matching or similar documents, often referred to as “retrieved memories.” It then appends these memories to the user’s original prompt, injecting fresh context aligned with the user’s intent.

Semantic search is performed to retrieve contextually relevant data. It provides a higher level of understanding and precision that closely mirrors human-like comprehension. Unlike traditional search methods that rely heavily on matching keywords, semantic search interprets the context and nuances of the user’s query, enabling it to grasp the user’s intent more accurately.

Example: If the user query is “Remember my favorite song,” semantic search can match stored fragments mentioning “jazz,” “concert,” or even specific titles.

This mapping is achieved through sophisticated algorithms that understand the semantic meaning of the query, identifying the most relevant information across a vast array of documents.

The vector database then retrieves the closest vectors, which map back to the original pieces of text. The most relevant text is presented in the final prompt to the LLM. Although it can still be lengthy, the prompt is now distilled to contain only the most relevant fragments.
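The lookup described above can be illustrated with a tiny in-memory store. This is a brute-force sketch, not how a production vector database works internally: `TinyVectorStore` is a hypothetical class, and the vectors would normally come from an embedding model.

```python
import math


def normalize(vector):
    """Scale a vector to unit length so a dot product equals cosine similarity."""
    length = math.sqrt(sum(x * x for x in vector))
    return [x / length for x in vector] if length else list(vector)


class TinyVectorStore:
    """Hypothetical in-memory store mapping vectors back to their text."""

    def __init__(self):
        self._rows = []  # list of (unit_vector, original_text)

    def add(self, vector, text):
        self._rows.append((normalize(vector), text))

    def search(self, query_vector, k=2):
        """Return the k (score, text) pairs closest to the query."""
        query = normalize(query_vector)
        scored = [
            (sum(a * b for a, b in zip(query, vec)), text)
            for vec, text in self._rows
        ]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return scored[:k]
```

The texts returned by `search` are the fragments that would be placed in the final prompt.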

Re-ranking & Hybrid Approaches

In more complex or high-stakes scenarios, a second “re-ranking” step can further refine the top matches to ensure the highest-quality document fragments are appended. Some solutions also combine keyword-based retrieval with embeddings in a hybrid search approach to handle exact phrases or names effectively.
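One common way to merge a keyword ranking with an embedding ranking is reciprocal rank fusion (RRF). The sketch below is one possible fusion technique, not necessarily the one used in any particular product; the document IDs are made up, and `k=60` is a conventional default rather than a tuned value.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Merge several ranked lists of document IDs into one.

    Each document earns 1 / (k + rank) from every list it appears in,
    so documents ranked well by several retrievers rise to the top.
    """
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=lambda doc_id: scores[doc_id], reverse=True)


# Hypothetical outputs of a keyword search and a vector search.
keyword_ranking = ["doc_a", "doc_b", "doc_c"]
vector_ranking = ["doc_b", "doc_d", "doc_a"]
fused = reciprocal_rank_fusion([keyword_ranking, vector_ranking])
```

Here `doc_b` and `doc_a` end up first because both retrievers returned them, while documents found by only one retriever rank lower.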

6. Prompt Augmentation

The selected relevant documents are used to augment the original user prompt by adding contextual information. This augmented prompt is then passed to the foundational model to generate a response—enhancing the model’s ability to provide accurate and contextually rich answers.

Prompt Engineering Details

- Place retrieved context in a clearly marked section (e.g., “Relevant Context: …”) so the LLM knows which text is reference material.

- Keep prompts concise to avoid exceeding token limits; prioritize the most relevant fragments.

- Use instructions or system prompts to guide the LLM’s style and reduce hallucinations.
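These guidelines can be combined in a small prompt builder. The sketch below is illustrative: the function name, the default instruction text, and the use of a word count as a stand-in for a token count are all assumptions.

```python
def build_prompt(
    question,
    fragments,
    max_words=200,
    instructions=(
        "Answer using only the context below. "
        "If the answer is not there, say you don't know."
    ),
):
    """Assemble an augmented prompt from fragments ordered most relevant first.

    Fragments are added until the word budget is reached; words are a
    rough proxy for tokens (assumption).
    """
    selected = []
    used = 0
    for fragment in fragments:
        cost = len(fragment.split())
        if used + cost > max_words:
            break
        selected.append(fragment)
        used += cost
    context = "\n".join(f"- {fragment}" for fragment in selected)
    return f"{instructions}\n\nRelevant Context:\n{context}\n\nQuestion: {question}"
```

Because the fragments arrive sorted by relevance, trimming from the end drops the least useful context first.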

7. Continuous Update

Knowledge libraries and their embeddings can be updated asynchronously (on a recurring basis) to ensure the system evolves and incorporates new information over time.

Maintaining Vector Database Freshness

- Regularly re-embed or update chunks for documents that change frequently.

- Consider versioning if needed to track older or archived documents.
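One way to decide which chunks need re-embedding is to compare a content hash against the hash stored at the last embedding run. The sketch below assumes chunks are tracked by a stable ID; the function names and data layout are hypothetical.

```python
import hashlib


def content_hash(text):
    """Return a stable fingerprint of a chunk's text."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def find_stale_chunks(current_chunks, stored_hashes):
    """Compare current chunk text with hashes saved at the last embedding run.

    current_chunks: {chunk_id: text}
    stored_hashes:  {chunk_id: hash recorded when the chunk was embedded}
    Returns (ids to embed again, ids to delete from the vector database).
    """
    to_embed = [
        chunk_id
        for chunk_id, text in current_chunks.items()
        if stored_hashes.get(chunk_id) != content_hash(text)
    ]
    to_delete = [chunk_id for chunk_id in stored_hashes if chunk_id not in current_chunks]
    return sorted(to_embed), sorted(to_delete)
```

Running this check on a schedule keeps embedding costs proportional to what actually changed rather than to the size of the whole library.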

Software Components

Building a retrieval-augmented generation (RAG)–based application requires expertise in several software components and operations with data pipelines (LLMOps).

Key components include foundation models, frameworks for training and inference, optimization tools, inference serving software, vector databases to accelerate search, model storage solutions, and data frameworks such as LangChain (open-source) and LlamaIndex for interacting with LLMs.

Performance and Scalability Tips

- Approximate Nearest Neighbor (ANN): For large-scale vector searches, libraries like FAISS or hnswlib provide fast lookups by approximating nearest neighbors rather than comparing the query against every stored vector.

- Index structures (e.g., IVF, HNSW) help balance retrieval speed with accuracy.

- Plan GPU resources carefully when embedding large volumes of text or querying at scale.
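The idea behind an inverted-file (IVF) index can be shown with a toy version: vectors are grouped by their nearest centroid, and a query only scans the closest groups. This is a simplified sketch, not the FAISS implementation. `TinyIVFIndex` is hypothetical, and the centroids are supplied by hand here, whereas real indexes typically learn them from the data (for example with k-means).

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


class TinyIVFIndex:
    """Toy inverted-file index: one bucket of vectors per centroid."""

    def __init__(self, centroids):
        self.centroids = centroids
        self.buckets = {i: [] for i in range(len(centroids))}

    def _nearest_centroids(self, vector, n):
        order = sorted(
            range(len(self.centroids)),
            key=lambda i: squared_distance(vector, self.centroids[i]),
        )
        return order[:n]

    def add(self, vector, text):
        bucket = self._nearest_centroids(vector, 1)[0]
        self.buckets[bucket].append((vector, text))

    def search(self, query, k=1, nprobe=1):
        """Scan only the nprobe closest buckets instead of every vector."""
        candidates = []
        for bucket in self._nearest_centroids(query, nprobe):
            candidates.extend(self.buckets[bucket])
        candidates.sort(key=lambda row: squared_distance(query, row[0]))
        return [text for _, text in candidates[:k]]
```

Raising `nprobe` scans more buckets, which improves recall at the cost of speed. That is the speed-versus-accuracy trade-off mentioned above.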

Security and Access Control

- In enterprise settings, enforce role-based access control (RBAC) to ensure users only retrieve data they’re permitted to see.

- Some vector databases and frameworks support row-level security or tenant isolation.
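A simple way to apply role-based access control is to filter chunks by the user's roles before any similarity search runs, so restricted text never reaches the prompt. The sketch below assumes each chunk carries an `allowed_roles` metadata field; that field name and the function are hypothetical.

```python
def allowed_chunks(chunks, user_roles):
    """Keep only the chunks that at least one of the user's roles may read.

    chunks: list of dicts with "text" and "allowed_roles" (a set of role names).
    """
    roles = set(user_roles)
    return [chunk for chunk in chunks if roles & chunk["allowed_roles"]]


# Hypothetical knowledge library with per-chunk permissions.
library = [
    {"text": "Public holiday schedule", "allowed_roles": {"employee", "hr"}},
    {"text": "Salary bands for 2024", "allowed_roles": {"hr"}},
    {"text": "Incident runbook", "allowed_roles": {"engineering"}},
]
visible_to_employee = allowed_chunks(library, ["employee"])
```

Many vector databases can apply an equivalent metadata filter inside the query itself, which avoids loading restricted chunks at all.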

RAG Use Cases

- Web-based Chatbots: Power customer interactions with a chat experience that responds to questions with insightful answers.

- Customer Service: Improve customer service by having live service representatives answer customer questions with updated information.

- AI Enterprise Search: Empower employees to query internal documentation to retrieve information.

- Tabular Data Search: AI can instantly navigate vast data sets to find insights, enhancing decision-making.

Retrieval-Augmented Generation Workflow: Visual Overview

Benefits of Retrieval-Augmented Generation (RAG)

It is difficult to identify which knowledge an LLM already possesses and to update or expand that knowledge without retraining. As models grow larger to store more knowledge, the process becomes computationally expensive and less efficient.

By building RAG applications, we enable a model to fetch relevant documents from a large corpus based on the input’s context and gain the following benefits:

1. Overcoming Implicit Knowledge Storage

RAG addresses the limitations of relying solely on a model’s internal parameters by adding interpretability, modularity, and scalability.

2. Transparent Prediction

Retrieving external documents as part of the prediction process clarifies which knowledge the model draws on to form its answers.

3. Flexible Updates

Separating retrieval from the predictive model allows for quick updates to the knowledge source without having to retrain the entire model.

4. Efficiency

RAG applications leverage external documents, potentially reducing the size and computational overhead of the core model.

5. Improved Performance on Knowledge-Intensive Tasks

By focusing on domain-specific or specialized information, RAG can improve accuracy on tasks where deeper knowledge is required.

Evaluation & Testing

- Track Metrics: Monitor “top-k retrieval accuracy” to see if the most relevant documents appear in the top results.

- Iterate with Feedback: Gather user input to fine-tune chunk sizes, prompt structure, or re-ranking methods.

- Manual Review: Periodically review outputs to ensure the system reliably retrieves and generates high-quality answers.
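Top-k retrieval accuracy can be computed from a small labeled set of queries. The sketch below uses one common definition, the fraction of queries with at least one relevant document in the top k results; the function name and sample data are illustrative assumptions.

```python
def top_k_accuracy(results, relevant, k=3):
    """Fraction of queries with at least one relevant document in the top k.

    results:  {query: ranked list of document IDs returned by the retriever}
    relevant: {query: set of document IDs a human marked as relevant}
    """
    if not results:
        return 0.0
    hits = sum(
        1
        for query, ranked in results.items()
        if set(ranked[:k]) & relevant.get(query, set())
    )
    return hits / len(results)


# Hypothetical evaluation set.
results = {
    "q1": ["a", "b", "c"],
    "q2": ["d", "e", "f"],
    "q3": ["g", "h", "i"],
    "q4": ["j", "k", "l"],
}
relevant = {"q1": {"a"}, "q2": {"f"}, "q3": {"z"}, "q4": {"k"}}
```

Tracking this number before and after a change to chunk size or re-ranking shows whether the change actually helped retrieval.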

Retrieval-Augmented Generation (RAG) lets organizations leverage the power of LLMs while continuously refreshing and refining their knowledge sources. By embedding domain-specific data, employing semantic search, and carefully engineering prompts, businesses can build agile, scalable, and accurate AI solutions—without retraining massive models for every update. The result is a dynamic, future-proof approach to generative AI that keeps pace with the evolving needs of modern enterprises.

I gotta respectfully disagree with some of the points made in this post! As a CS analyst who’s worked extensively with LLMs, I think the author is oversimplifying the benefits of RAG (retrieval augmented generation). What about the limitations of retrieval? Don’t we risk over-reliance on external data rather than truly understanding the model’s internal workings? And what about the trade-offs between accuracy and efficiency? Let’s dive into this further!